I’m now attending a panel session that aims to highlight themes emerging from the conference. But first, a pitch or two. There’s an international series of learning analytics events from 1 to 5 July, starting at Stanford and then following the sun. Sheila and Martin (Jisc CETIS) and I are pondering whether we could step up and run something in parallel, though perhaps not for the full five days. It could contribute to some grass roots nurturing of the Learning Analytics Community in the UK, if (and I think it’s a big if) we can find the right people. I’ve suggested we go through Planners and ask them to pass the word on to colleagues they know are involved with data. AUA would be another route. Jisc InfoNet and I are starting to engage with professional associations that represent UK staff groups and job roles, and I’ll include this to see what response it gets.

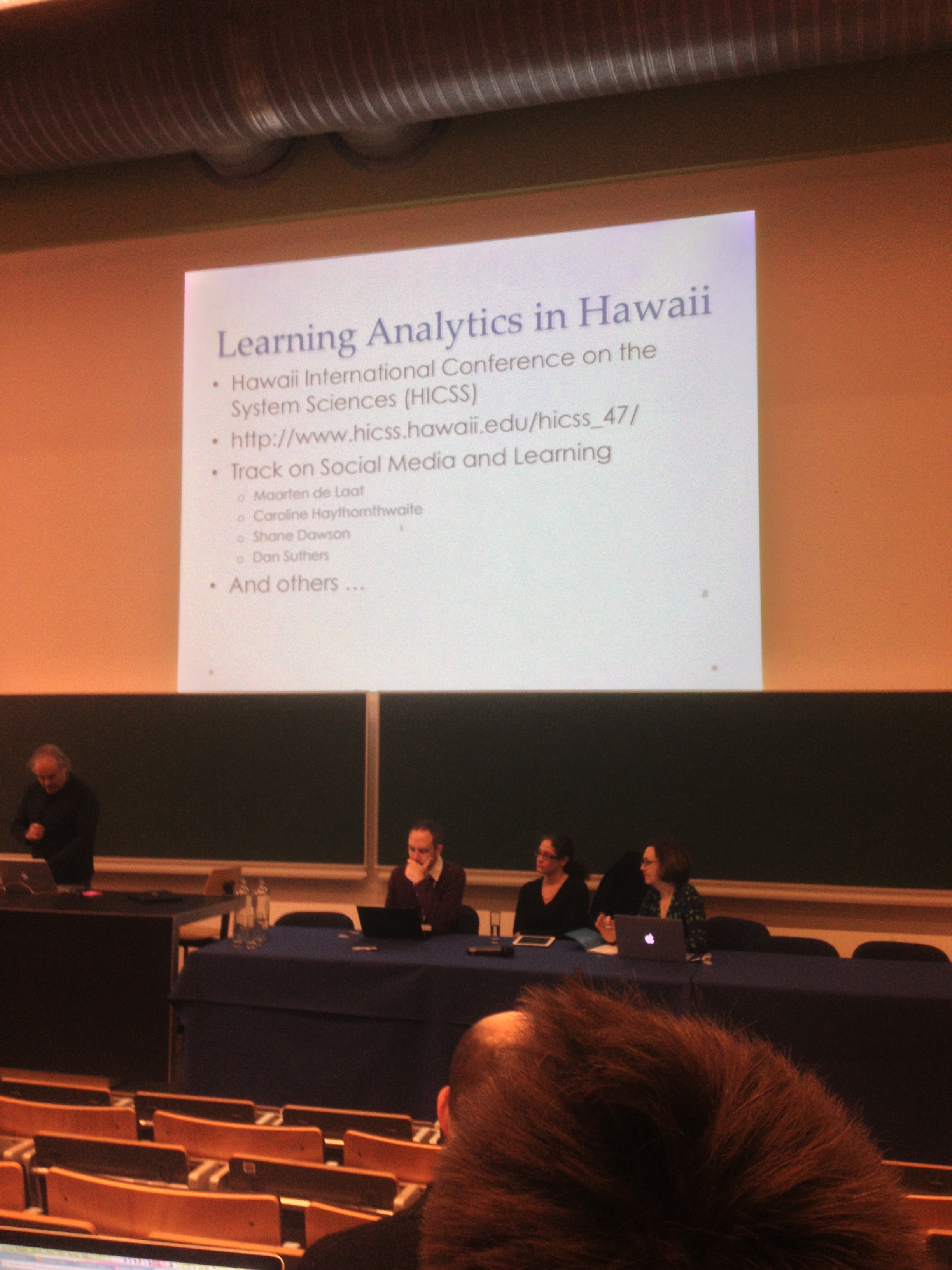

We learned last night that LAK14 will be hosted in Indianapolis, so presumably NASCARs will be in abundance, but Jisc programme managers will not (I’m very fortunate to be here in Leuven; it’s getting tougher to make a business case for overseas locations). Dan Suthers (who pulled me into the panel yesterday) pitched this even more exotic event too:

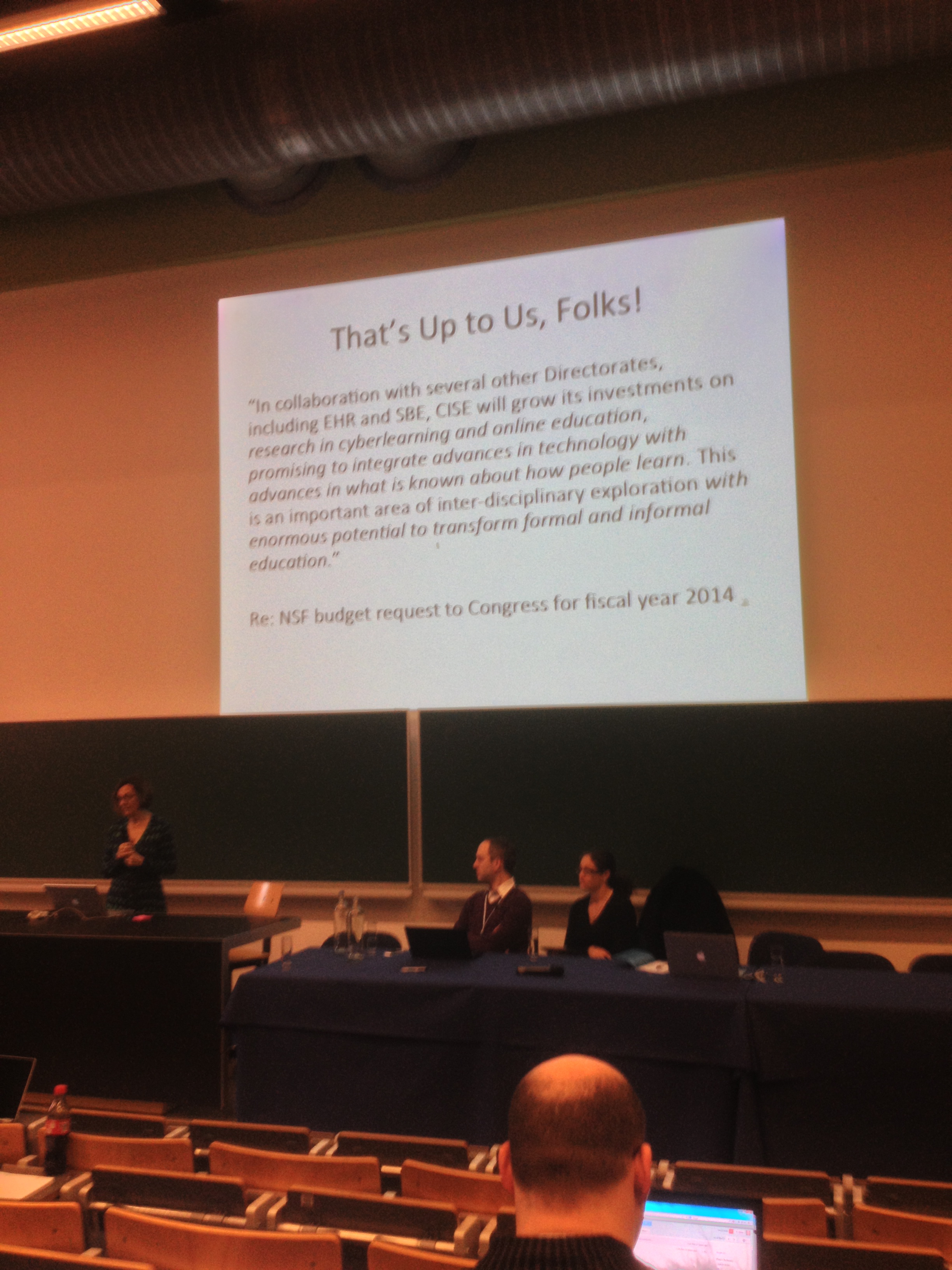

Out of the blocks early this morning, and we have the first paradigm shift of the conference, regarding big data and reinventing the classroom. NSF is reporting a healthy increase in its budget, with cyberlearning and online education highlighted as key investment areas.

I threw down my own challenge yesterday in the panel session I was part of. It centred on the need to coordinate an innovation-to-embedding cycle. I noted that the loop isn’t closed yet, so the chances of the research beginning to change daily practice are far from good. Here’s a summary of my points:

- Focus on the benefits (which ones, to whom, and how).
- Co-design and partnerships (include vendors and stakeholder bodies).
- Business cases for investment.
- Address policy and governance.
- Address organisational AND individual readiness issues.
- Keep up the grass roots innovation.

This seemed to go down well, though I don’t see the capacity here to coordinate it. I had a chat last night with Doug Clowes and Tribal. I was impressed that Tribal has an innovation arm that gathers needs and expertise from its academic clients in order to do the heavy lifting for them. In that sense it acts as a shared service across Tribal’s academic clients, undertaking requirements gathering, stakeholder needs analysis and horizon scanning, and taking academic theory and developments from incubation to reliable service. That’s the sort of thing I was pitching for.

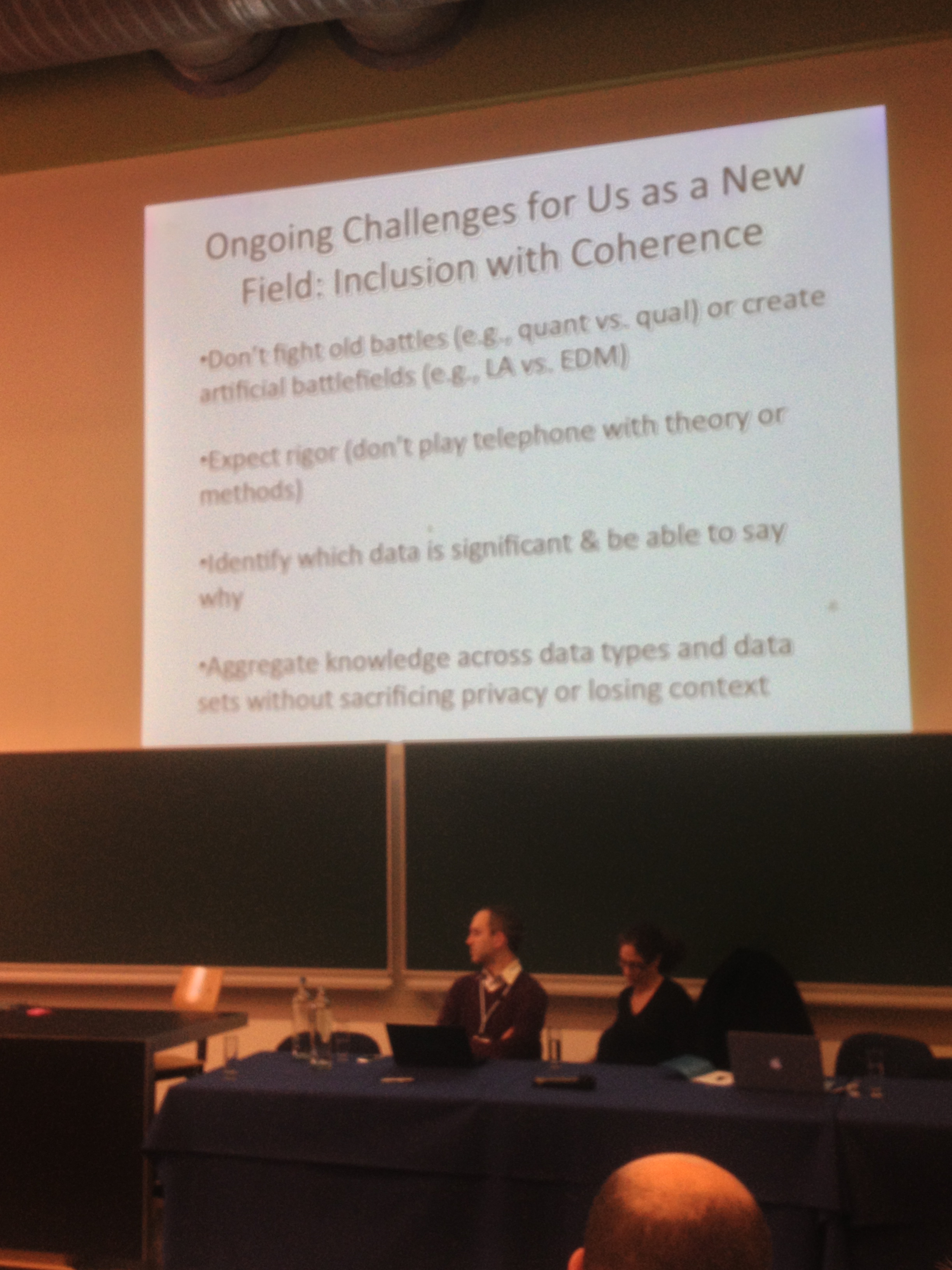

Here are some challenges from the speaker.

Next up, Paulo Blikstein offers his perspectives on learning analytics research.

He notes that we teach what we can measure: if we don’t measure what we care about, it will never be taught. The point is not simply to optimise what we already teach; rather, we should design new ways to measure the things we care about, and teach those.

His next observation is that there is a bias toward cheap data. We should work not only on data analysis but also on new data collection techniques. The latter may not be such a quick win, but it is essential work.

We need to push the system to embrace multimodality and the social aspects of learning. It’s easy to collect data about student activity (that cheap data) but there are other interactions we shouldn’t ignore that are harder to capture. Acknowledging the social aspects requires changing the ways we collect and analyse data. This is evident in the Derby Dartboard I mentioned in an earlier post from the conference.

His next thought is that there are big political and economic interests at play, but we need to keep an eye on the end game. Analytics might lead us to a future that, while educationally efficient and profitable, is not desirable. The image of children sitting in isolation pressing buttons while the analytics system serves up course content based on their performance is an extreme example.

And the last point? Disruption. The data analysts here must step outside their comfort zones and play the role of disruptor.

Our final speaker is Alyssa Wise from Simon Fraser University in Vancouver. I chatted with Alyssa last night; she is very active in Twitter and social analytics research. She asks ‘Do we really need another dashboard?’, which raised a few laughs.

Alyssa suggests that instead of large, overarching dashboards (or a large, all-encompassing analytics system) we need a series of smaller ones fit for task. Smaller projects give us the agility we need to achieve quality. Alyssa is heavily into discourse analytics, the analysis of communication. I blogged on this area yesterday but didn’t see Alyssa speak. She suggests that consequential validity is important: what implications and assumptions are embedded in the systems, and how they will affect the way people teach. The analytics will shape the pedagogy, so diversity could be lost, and it shouldn’t be!

People should stay at the centre rather than be replaced by algorithms and data, yet the conference has focused far more on the latter two. Using data to empower people is a good thing (action based on sound recommendations), but we should also preserve their ability to add the human angle: creativity and so on.

The standards and methods shouldn’t be overlooked. Tough to argue against that!

The panel have asked the audience ‘what blew your mind’ at the conference.

Clearly I’m in a room full of academics, as neither panel nor audience stuck to the question. Here’s one answer: the extent to which many talks were grounded in learning theory was seen as a wow factor, together with the concept of the imaginary learner.

Garbage in, garbage out is as valid as it ever was.

I think it would be nice if this conference had a track on applied research, aimed at the multidisciplinary teams needed to tackle widely applicable educational problems, in addition to the niche research.

Linda Baer notes the importance of pure LAK research in keeping ahead of demands from politicians and policy makers for efficiency and effectiveness (though the appeal to big data seemed a little unnecessary). The panel felt that being at the bleeding edge is not what academia is good at. Huh?! If not the researchers, then who?! It was good that another panel member noted the need for the community to be proactive rather than reactive to these sorts of demands, and to the opportunities to address them through learning analytics.

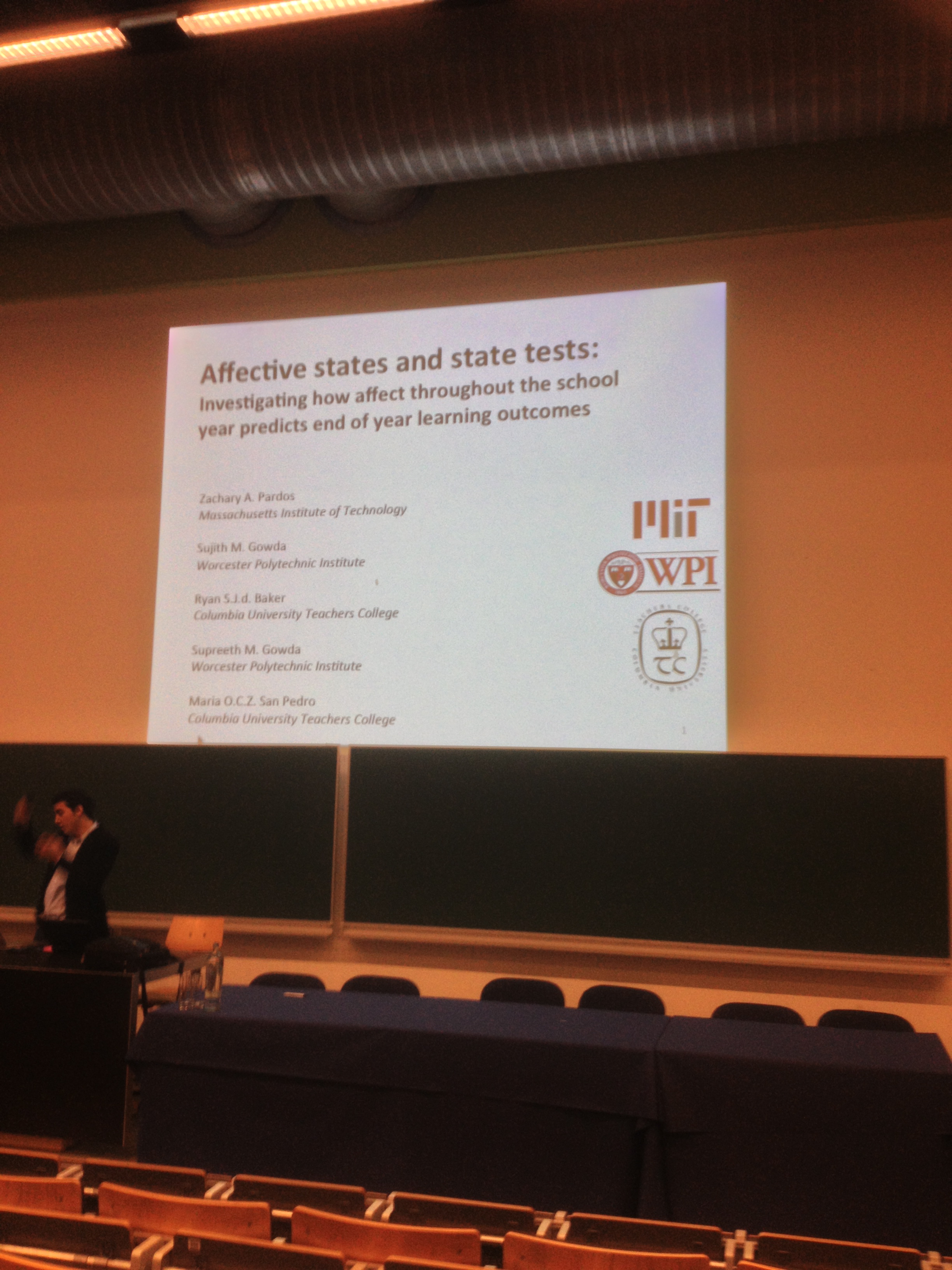

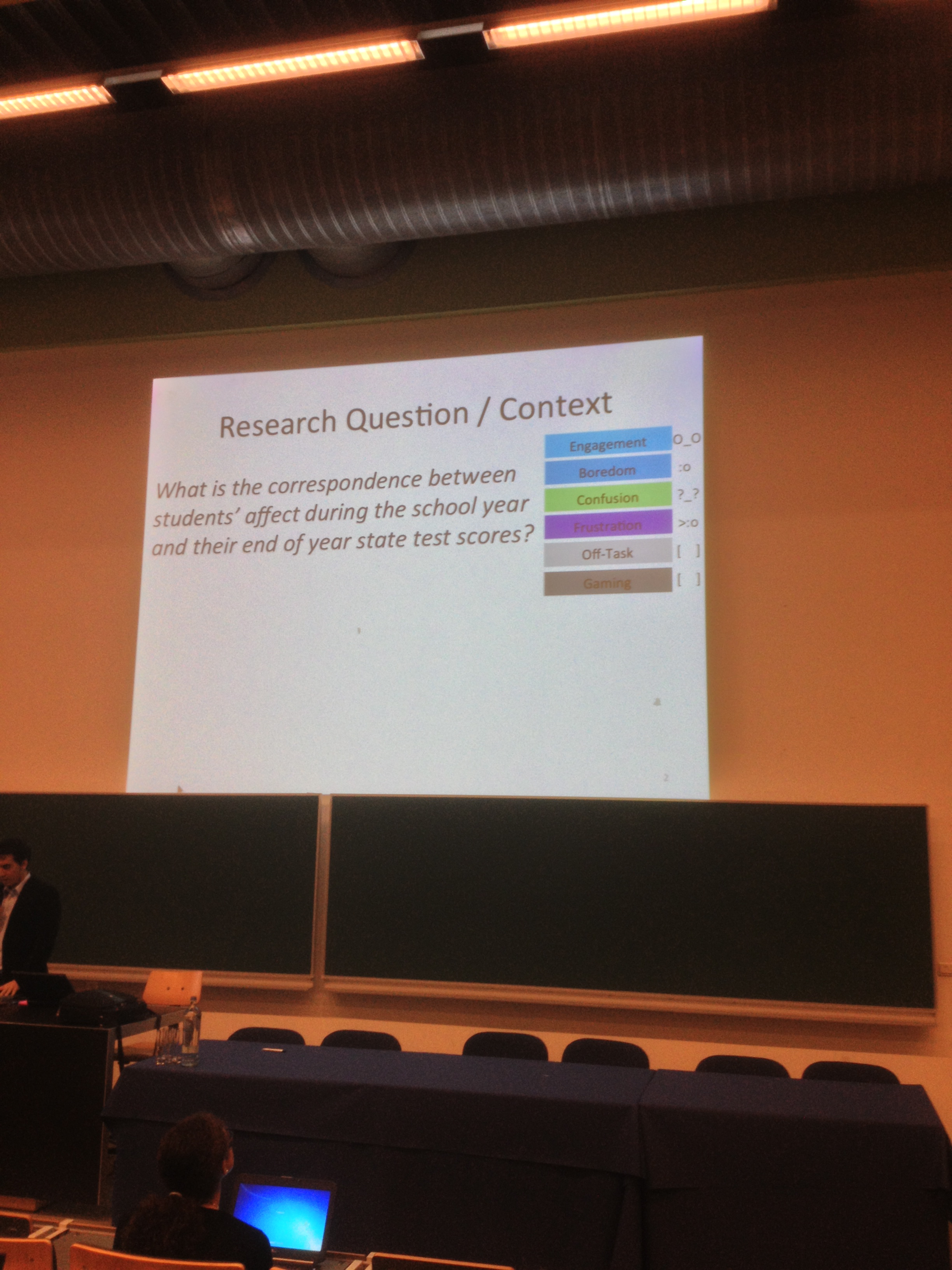

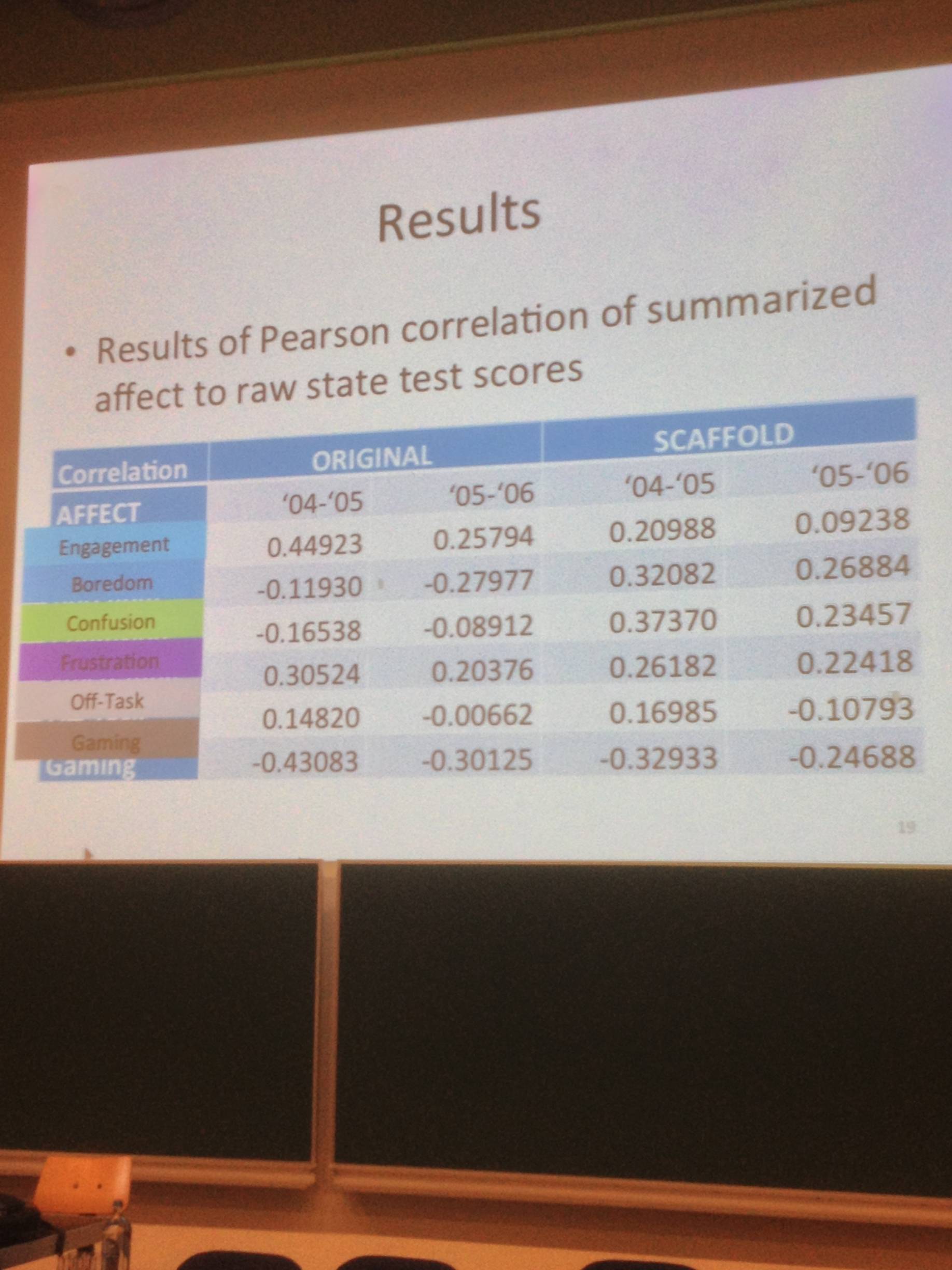

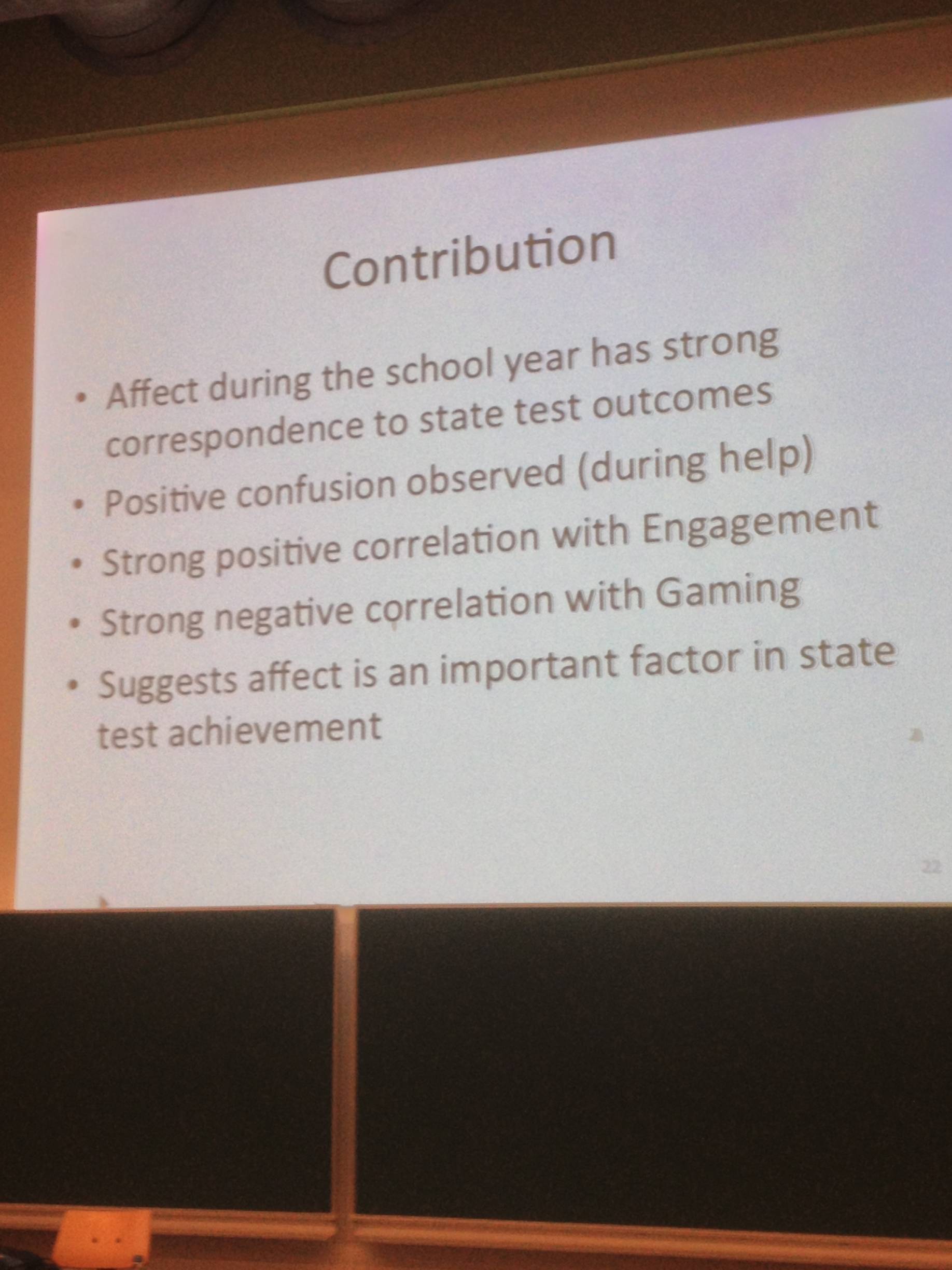

We’re into the penultimate session of the conference, and Zac is talking about the correspondence between students’ behaviour and affect states during the school year and their end-of-year test performance. If I’m more engaged during the school year, will I get a higher grade? If I’m more bored during the year, will I have a higher test score?

Note that ‘Affect’ is on the right of the slide: engagement, boredom, confusion, frustration, off task and gaming. In terms of actionable insight, if I’m bored, the system might offer a particular activity to address this.

One reason to measure how affect correlates with state test scores is teaching to the test: once again we hear that ‘we teach what we can measure’. If affect correlates strongly with test performance, we should teach with affect in mind.

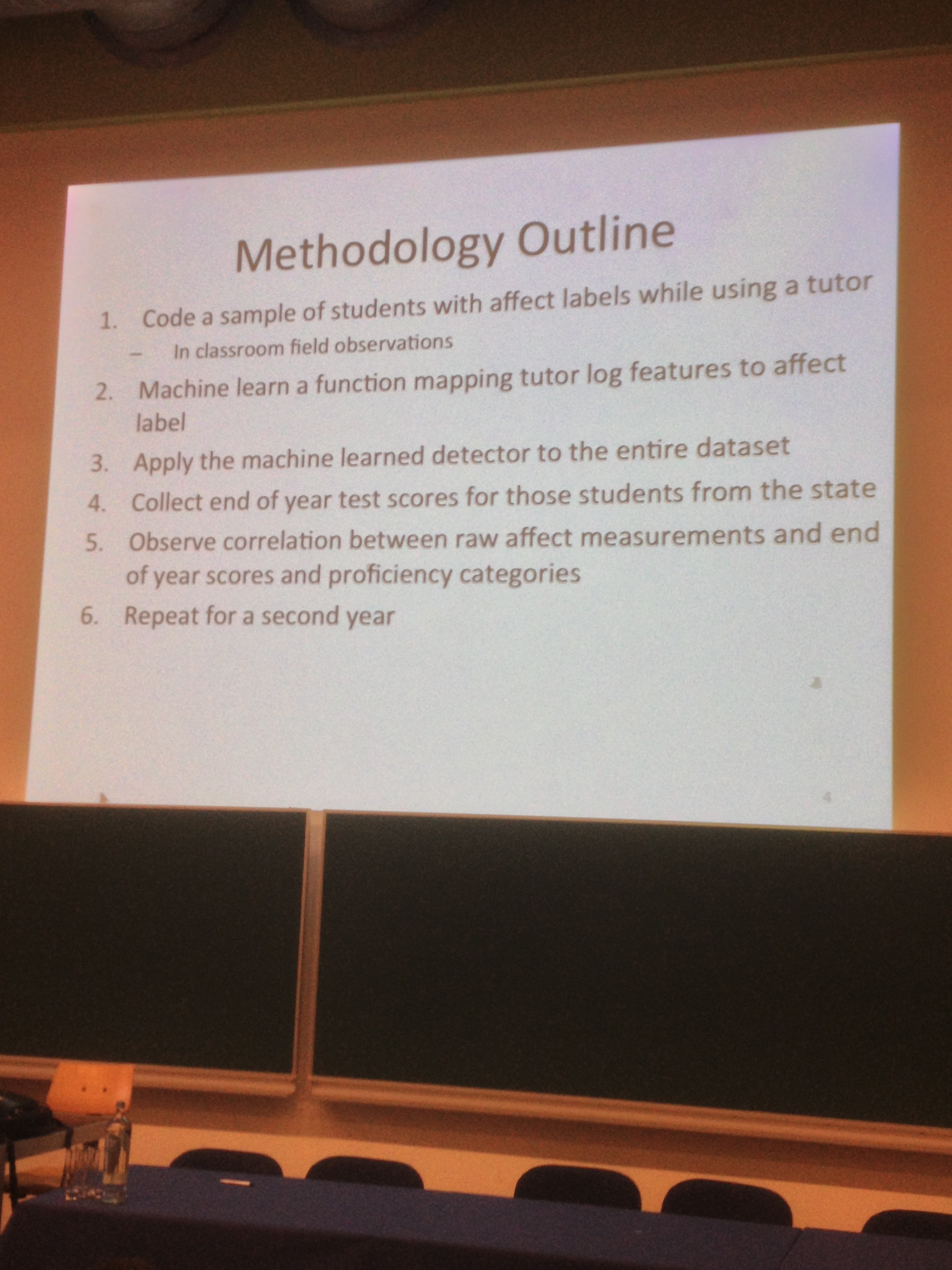

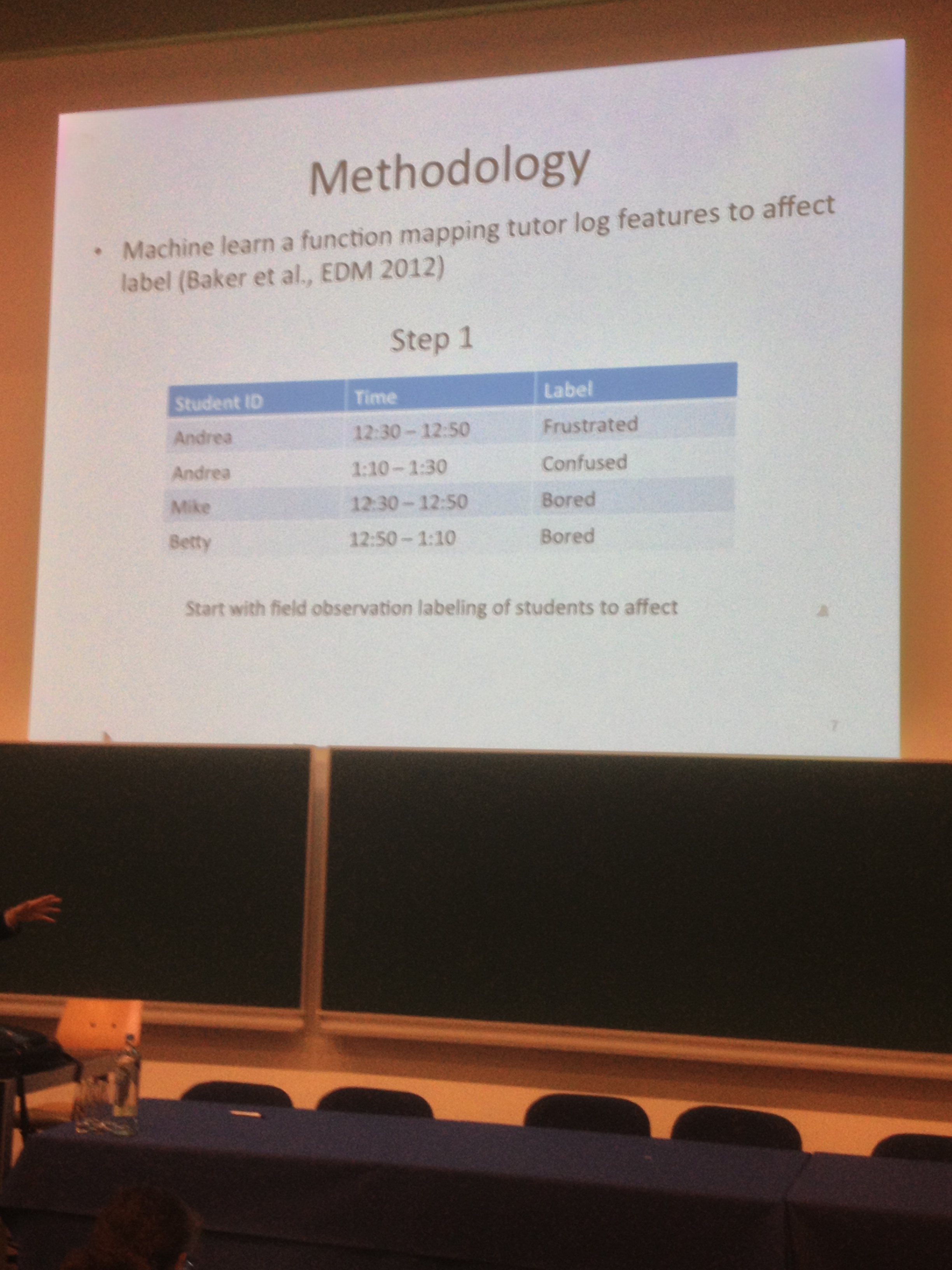

Here’s the methodology:

Students were coded with affect labels (see my blog post from yesterday afternoon for more on this technique). In total, 3,075 observations were made of 229 students, along the lines of:

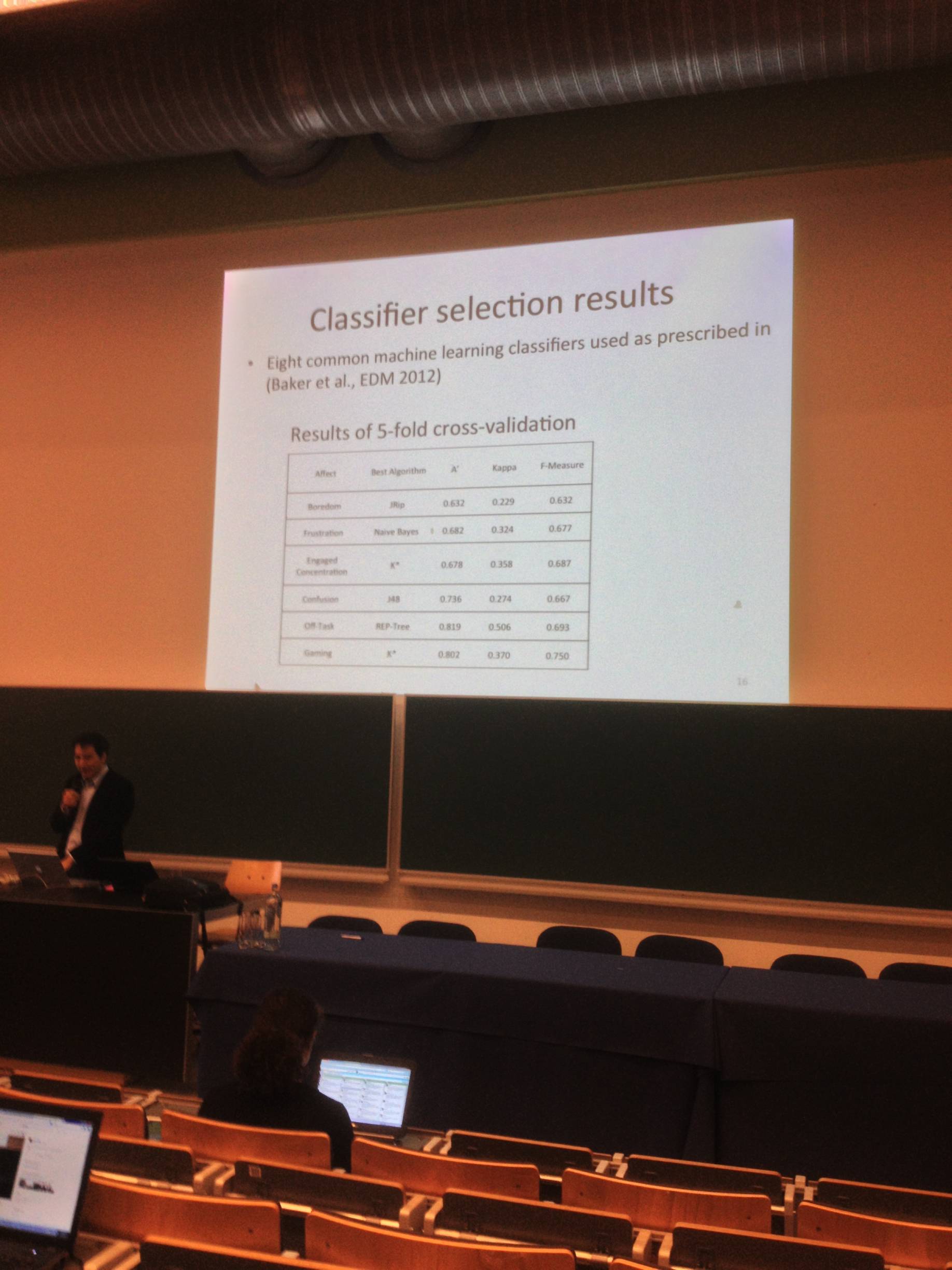

Kappa values are king here at LAK 13, and this talk presented several.
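For readers outside the field: in this line of work, kappa is usually Cohen’s kappa, a chance-corrected agreement statistic used to check agreement between two human coders, or between an automated detector and human coders. It is computed as (p_o - p_e) / (1 - p_e), where p_o is the observed share of matching labels and p_e is the agreement expected by chance from each coder’s label frequencies. The minimal Python sketch below assumes two coders labelling the same observations; the function name and the affect labels are hypothetical and are not data from the talk.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)
    # Observed agreement: share of observations where both coders chose the same label
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each label, product of the two coders' marginal frequencies
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        # Degenerate case: both coders used one and the same label throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical affect codes from two observers watching the same six moments
coder_a = ["engaged", "engaged", "bored", "confused", "engaged", "bored"]
coder_b = ["engaged", "bored", "bored", "confused", "engaged", "bored"]

kappa = cohens_kappa(coder_a, coder_b)
print(f"kappa = {kappa:.2f}")
```

Here the coders agree on five of six moments (p_o of about 0.83), but chance agreement is 13/36, so kappa comes out at 17/23, about 0.74. The chance correction is why kappa, rather than raw percentage agreement, is the number people quote.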

The goal was to correlate affect with state test scores. In the results, ‘original’ refers to a student’s own attempt at a problem and ‘scaffold’ to an attempt after an intervention.

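A negative value means an affect state goes with lower test scores and a positive value with higher scores; the closer to zero, the weaker the relationship. The results showed all manner of relationships one wouldn’t have predicted without the analysis. The speaker then summarised the actionable insights from the analytics.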
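One plausible way to get from coded observations like these to a correlation with test scores (an assumption on my part, not necessarily the speaker’s exact pipeline) is to compute, for each student, the share of observations coded with a given affect state, and then take Pearson’s r against that student’s test score. The function names, student IDs, labels and scores below are all hypothetical.

```python
import math
from collections import Counter


def affect_proportions(observations, state):
    """Share of each student's observations that were coded with `state`.

    observations: iterable of (student_id, affect_label) pairs.
    """
    totals, hits = Counter(), Counter()
    for student, label in observations:
        totals[student] += 1
        if label == state:
            hits[student] += 1
    return {student: hits[student] / totals[student] for student in totals}


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Root sum of squared deviations (the 1/n factors cancel in the ratio)
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (spread_x * spread_y)


# Hypothetical coded observations: (student, affect label)
observations = [
    ("s1", "engaged"), ("s1", "engaged"), ("s1", "bored"), ("s1", "engaged"),
    ("s2", "bored"), ("s2", "bored"), ("s2", "engaged"), ("s2", "confused"),
    ("s3", "engaged"), ("s3", "engaged"), ("s3", "engaged"), ("s3", "engaged"),
    ("s4", "bored"), ("s4", "bored"), ("s4", "bored"), ("s4", "engaged"),
]
# Hypothetical end-of-year test scores
test_scores = {"s1": 240, "s2": 228, "s3": 252, "s4": 220}

boredom = affect_proportions(observations, "bored")
students = sorted(test_scores)
r = pearson_r([boredom[s] for s in students], [test_scores[s] for s in students])
print(f"boredom vs test score: r = {r:.2f}")
```

With four made-up students this shows only the mechanics; a real analysis over hundreds of students would also need to decide how to treat students with very few observations.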

So does it pass the ‘So What’ test? Not really, because there was no description of actions taken. Clever stuff though.

I suffered a technical failure at this point, so the blogging had to stop.

In summary, we’ve heard about visualisation to support awareness and reflection, sequence analytics, design briefings, multidisciplinarity, predictive analytics, MOOCs (I ducked those sessions), communication and collaboration, strategies, challenges of scale-up, ethics and data, discourse analytics (I quite liked these), assessment analytics, recent and future trends (my panel), behaviour analytics and reflective plenaries. That was just during the day programme. The evening sessions ran until late and offered the space and (in the case of the SURF dinner) the framework for further conversations. My thanks to the city of Leuven with its stunning educational architecture (dating back to the 1500s), our local host Erik Duval, the LAK organising panel, and the talented speakers and participants.