What Can We Learn from Facebook Activity? Using Social Learning Analytics to Observe New Media Literacy Skills. June Ahn.

Now digital literacies are close to my heart. I’m helping oversee a Jisc programme in the area so I was keen to hear this talk. The aforementioned programme has been collating resources, advice and findings over the last 18 months and depositing them here. The project phase concludes July 13 while the work with the 10 professional associations representing the majority of staff groups in UK HE runs until December. We have a webinar series that is free to attend, with a back catalogue of recordings, and will be promoting the work through professional association conferences in 2013.

So on with the LAK session on New Media Literacies and what we can learn from Facebook via the University of Maryland.

New Media Literacies are offered as any of the following:

Play, Performance, Simulation, Appropriation, Multitasking, Distributed Cognition (taking notes and sharing bookmarks and live blogging presumably), Collective Intelligence (effectively collaborating digitally), Judgement, Transmedia (the trajectory of stories via blogs, Twitter and other platforms), Networking and Negotiation.

Whereas the Jisc digital literacies were defined as:

ICT/computer literacy: the ability to adopt and use digital devices, applications and services in pursuit of goals, especially scholarly and educational goals

information literacy: the ability to find, interpret, evaluate, manipulate, share and record information, especially scholarly and educational information, for example dealing with issues of authority, reliability, provenance, citation and relevance in digitised scholarly resources

media literacy, including for example visual literacy, multimedia literacy: the ability to critically read and creatively produce academic and professional communications in a range of media

communication and collaboration: the ability to participate in digital networks of knowledge, scholarship, research and learning, and in working groups supported by digital forms of communication

digital scholarship: the ability to participate in emerging academic, professional and research practices that depend on digital systems, for example use of digital content (including digitised collections of primary and secondary material as well as open content) in teaching, learning and research, use of virtual learning and research environments, use of emergent technologies in research contexts, open publication and the awareness of issues around content discovery, authority, reliability, provenance, licence restrictions, adaption/repurposing and assessment of sources

learning skills: the ability to study and learn effectively in technology-rich environments, formal and informal, including: use of digital tools to support critical thinking, academic writing, note taking, reference management, time and task management; being assessed and attending to feedback in digital/digitised formats; independent study using digital resources and learning materials

life-planning: the ability to make informed decisions and achieve long-term goals, supported by digital tools and media, including for example reflection, personal and professional development planning

This talk is an analysis of FB features and activities mapped to the aforementioned New Media Literacies. So friend lists, member pages, status, links/photos, videos, networks.

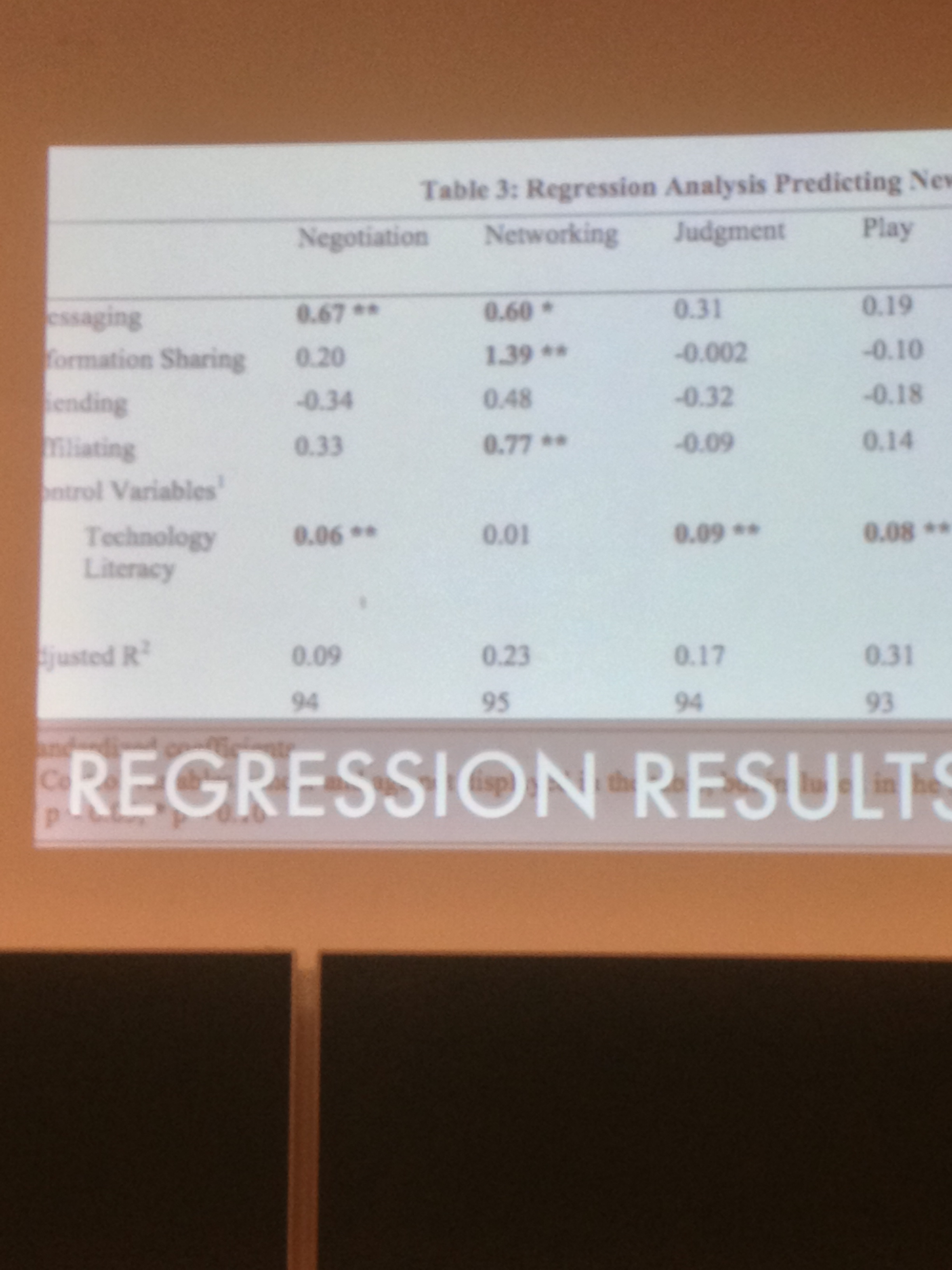

Here are the regression results

All very well but in the words of someone far wiser than me…. So what?

The notion here is that FB is often seen as a distraction. The study has shown that users of the various features are developing new media skills (to a greater or lesser extent). I didn’t see any analytics in this.

Next up Improving retention: predicting at-risk students by analysing clicking behaviour in a virtual learning environment. Annika Wolff, Zdenek Zdrahal, Andriy Nikolov and Michal Pantucek.

One of my own projects this one, from the OU and funded through the Jisc Business Intelligence Programme. The team developed predictive models to identify which students would benefit from an intervention. Data sources were limited to VLE, Assessment and Demographic historical data. Quite key this. It’s not quite a live service but has institutional finance to become so imminently.

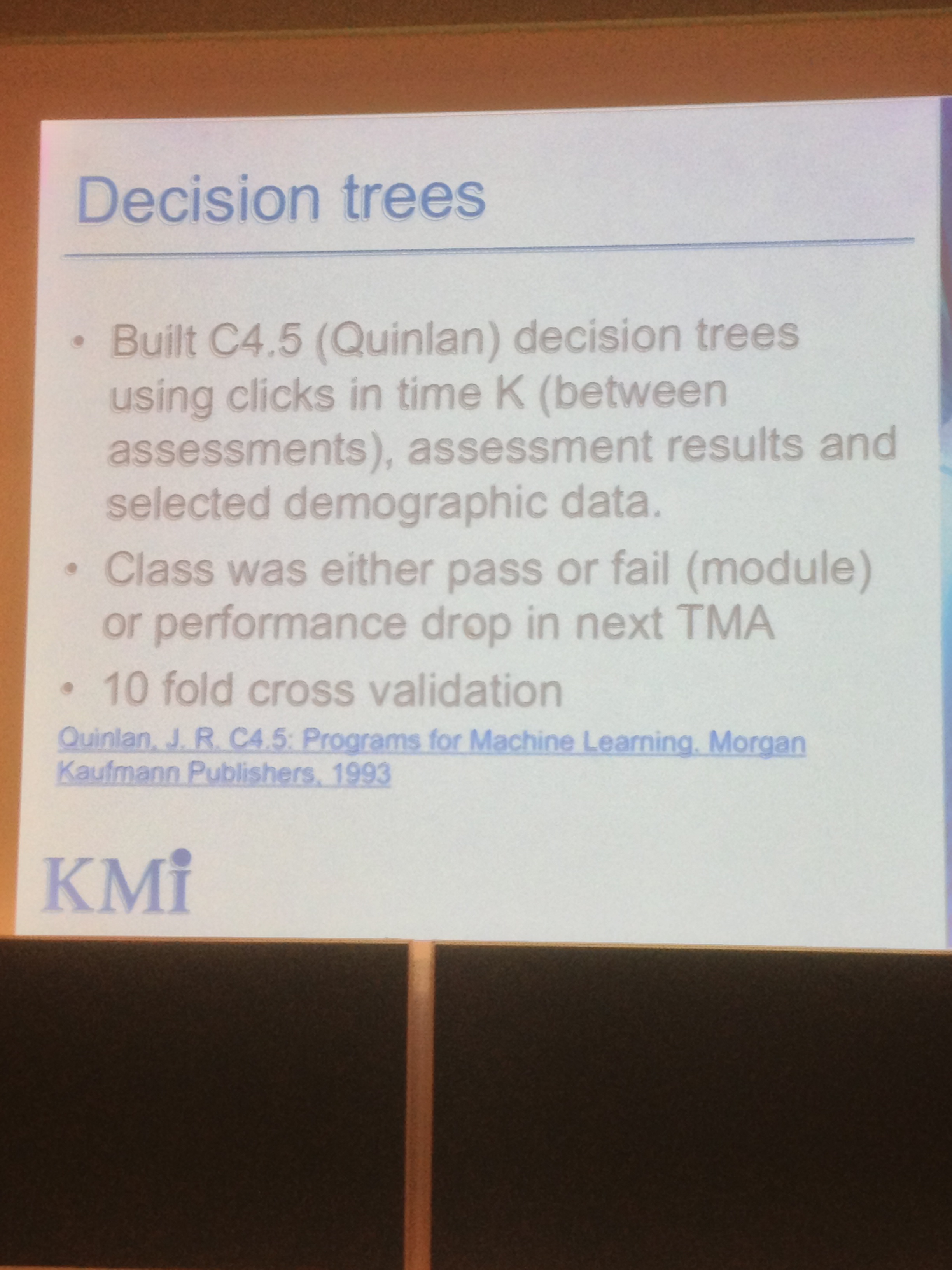

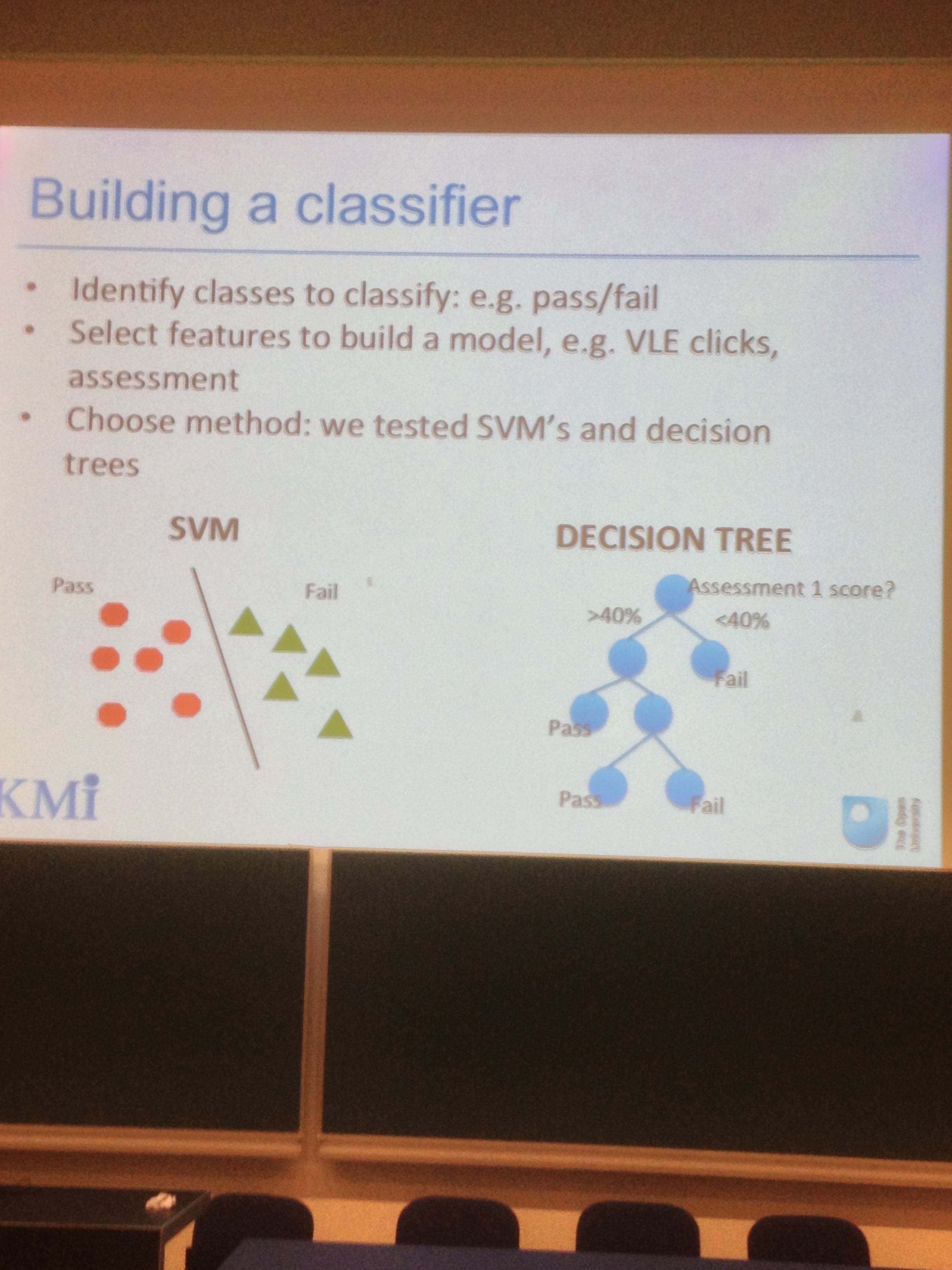

The number of clicks a student makes does not map directly to engagement. The project therefore built a number of classifiers. Here’s a slide to demonstrate that

The predictions of performance drop were extremely accurate. The models appear to predict powerfully. The team also note that after the third assessment the Grade Point Average (GPA) becomes the best indicator to use. I presume that’s because it’s more accurate and easier.

The most interesting finding was that a student who used to work in the VLE and then stopped, or reduced their activity compared to their own previous level, prior to an assessment was likely to fail that assessment.
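To make that finding concrete, here is a minimal sketch of how a drop in activity relative to a student's own baseline could be flagged. This is not the OU team's classifier: the function name, the two-week window, the 50% threshold and the click counts are all hypothetical values chosen for illustration.

```python
from statistics import mean

def activity_dropped(weekly_clicks, recent_weeks=2, drop_ratio=0.5):
    """Return True if recent VLE activity fell well below the student's own baseline.

    weekly_clicks: click counts per week, oldest first, ending just before
    the assessment. recent_weeks and drop_ratio are illustrative assumptions,
    not values reported in the talk.
    """
    if len(weekly_clicks) <= recent_weeks:
        return False  # not enough history to form a baseline
    baseline = mean(weekly_clicks[:-recent_weeks])
    recent = mean(weekly_clicks[-recent_weeks:])
    if baseline == 0:
        return False  # never active, so there is no drop to detect
    return recent < drop_ratio * baseline

# Invented example data: weekly clicks leading up to an assessment.
students = {
    "s1": [40, 35, 42, 5, 0],    # active, then stopped
    "s2": [3, 2, 4, 3, 2],       # low but steady
    "s3": [20, 25, 22, 24, 21],  # steady
}
flagged = [sid for sid, clicks in students.items() if activity_dropped(clicks)]
print(flagged)
```

Comparing each student against their own history, rather than against a fixed click count, is what keeps the low-but-steady student from being flagged, which fits the point that raw click numbers do not map directly to engagement.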

I’ve popped the conclusion slide in below. Thanks Annika for the acknowledgement of funding via Jisc.

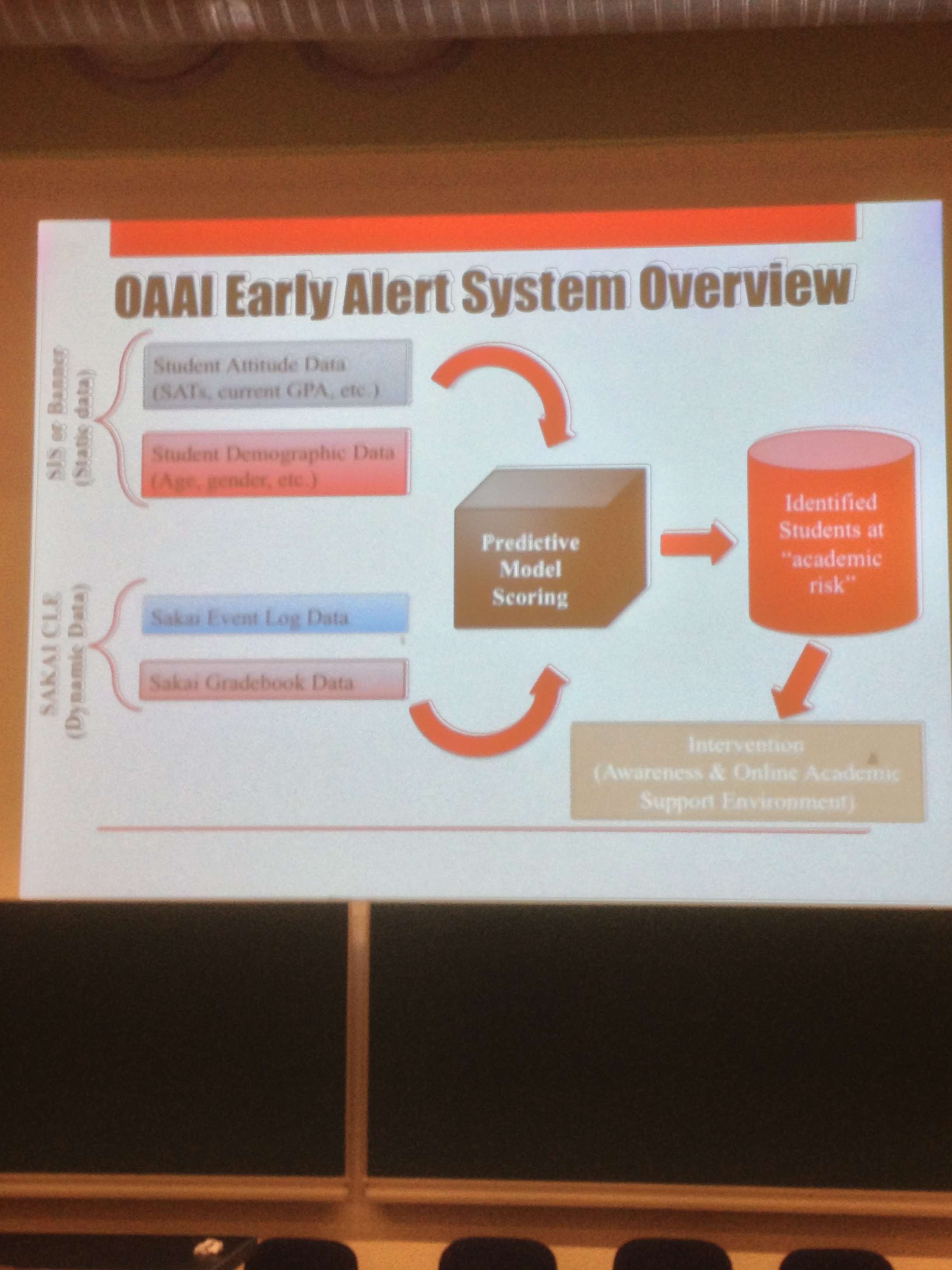

Last of the day it’s Open Academic Analytics Initiative: Initial Research Findings. Eitel Lauria, Erik Moody, Sandeep Jayaprakash, Nagamani Jonnalagadda and Joshua Baron. I had lunch with Erik and discussed this open source implementation of a Purdue Course Signals sort of a thing. They have it patched into Sakai as well as Blackboard and are about out of funding. It was supported by the Gates Foundation to the tune of USD 250K. He tells me he has stories of benefits realisation in terms of student retention and enhancements in efficiencies.

Questions they sought to answer:

1. How good are predictive models?

2. What are good model predictors? SATs and GPA; demographic data; Sakai Event Log; Sakai Grade Tool.

3. How portable were the models (across 4 different institutions, 36 courses at community colleges and traditionally black institutions so 1000+ students per semester)?

The underlying data sources and system overview are summarised below.

Much time was given over to the analysis and predictive modelling here by the analyst, which is not surprising. The performance measures used were accuracy, recall, specificity and precision. Unfortunately the speaker was rushing so I missed the definitions. Might be linked to Pentaho analysis.
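For reference, the standard textbook definitions of those four measures can be sketched from a confusion matrix as follows. These are not necessarily the exact formulations the speaker used, and the counts are invented purely for illustration, with "positive" assumed to mean a student predicted to be at risk.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard confusion-matrix measures for a binary classifier.

    tp/fp/tn/fn are counts of true positives, false positives,
    true negatives and false negatives. Assumes every denominator
    below is non-zero.
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,   # share of all predictions that were right
        "recall": tp / (tp + fn),        # share of actual positives that were caught
        "specificity": tn / (tn + fp),   # share of actual negatives correctly cleared
        "precision": tp / (tp + fp),     # share of flagged cases that were truly positive
    }

# Hypothetical counts, not results from the talk.
metrics = classification_metrics(tp=30, fp=10, tn=50, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

For an at-risk model, recall matters because a missed student gets no intervention, while precision and specificity indicate how many students would be contacted unnecessarily.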

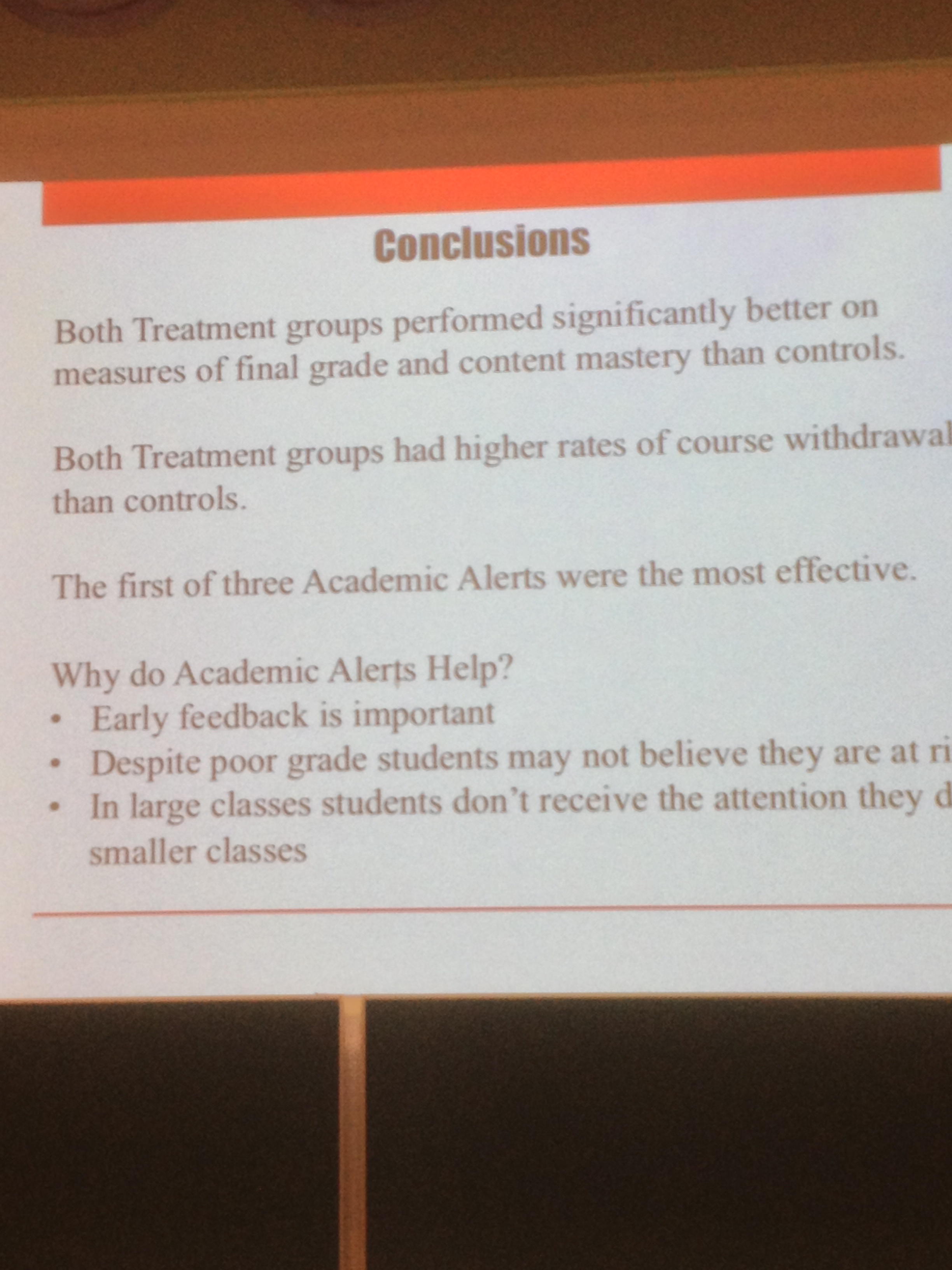

Erik next discussed the action taken from the insights. There were three groups: a control group (no intervention), an awareness group (had messages sent) and an OASE group (intensive interventions). Some great graphs showed evidence of GPA enhancements and withdrawal rate decline, but he skipped through them too fast for me to capture.

Sadly a rushed presentation but some great evidence.