Apologies for the lack of blog posts this morning. I had to work up a session for a panel this afternoon and join a teleconference for the day job. Here’s a photo of the Leuven town hall I took last night, though.

Issues, Challenges, and Lessons Learned When Scaling up a Learning Analytics Intervention. Steven Lonn, Stephen Aguilar and Stephanie Teasley.

The intervention, described briefly, gives tutors student information intended as the basis for conversations about performance, based on data and analytics, of course. Scale-up issues follow.

By tagging onto Business Objects, the corporate Business Intelligence (BI) tool, the project aimed to ‘leverage’ existing resources and boost uptake as the system scaled.

Nice to hear of people doing this. There are clear overlaps between analytics and BI, and in my experience tapping into existing corporate services, governance, etc. is one way of gaining momentum quickly (though risky in terms of visibility, so be confident in your new service!).

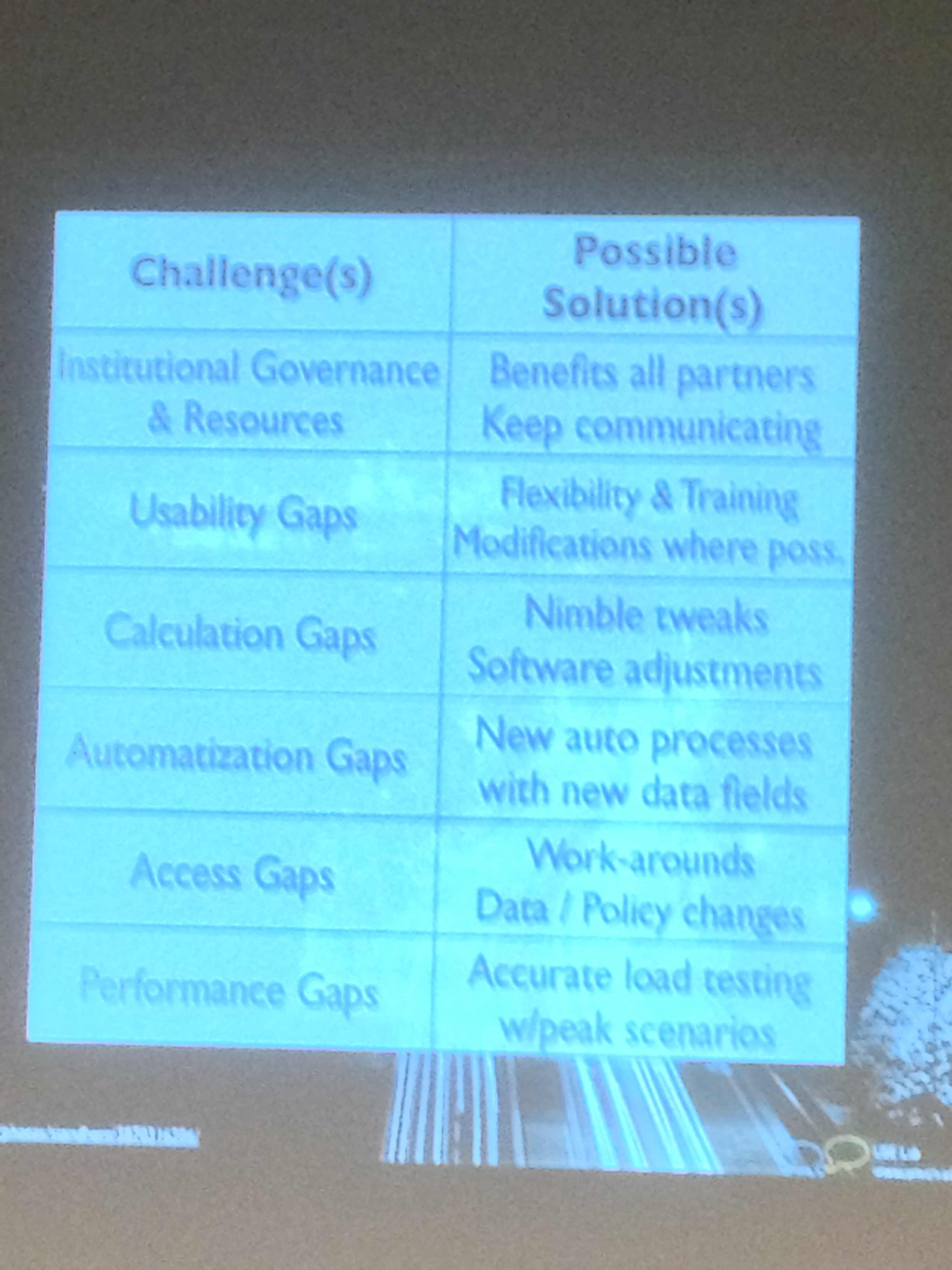

A number of teething issues were identified: system performance, data visualisation, and crashing the LMS with 12K people online (remember to load test before launching).

Business Objects was helpful, but the decision has been made to migrate to an alternative corporate service (the speaker is a little fast and I missed the name).

Here’s the summary slide

For me this was atypical in that the IT department were charged with running an analytics project with little or no steer, and came to the analytics team to roll out their research project. I’d imagine some serious clashes there, but no detail was given. It was a short paper, so one can excuse the lack of detail on the analytics system features, and I suspect it left out a lot of the negotiation needed to achieve the links to Business Objects.

An evaluation of policy frameworks for addressing ethical considerations in learning analytics. Paul Prinsloo and Sharon Slade.

Institutions gather a lot of data about their students. The data policies in place aren’t keeping up with the use cases for that data. The talk discusses frameworks for ethics.

The team believe that informed consent is now a requirement, so that the data students provide is correct and current, and so that students understand what the data is used for.

The potential for stereotyping in analytics is high, and steps should be taken to limit allegations of misuse and harm.

Collecting, analysing and storing data from outside the home institution is becoming prevalent in analytics. Care must be taken to gain consent, protect the data, ensure the use cases are transparent, and address misuse and harm.

There was quite a lot of repetition in this one. Jisc commissioned a report on Risks and Ethics for Analytics, which is rather good and freely available.

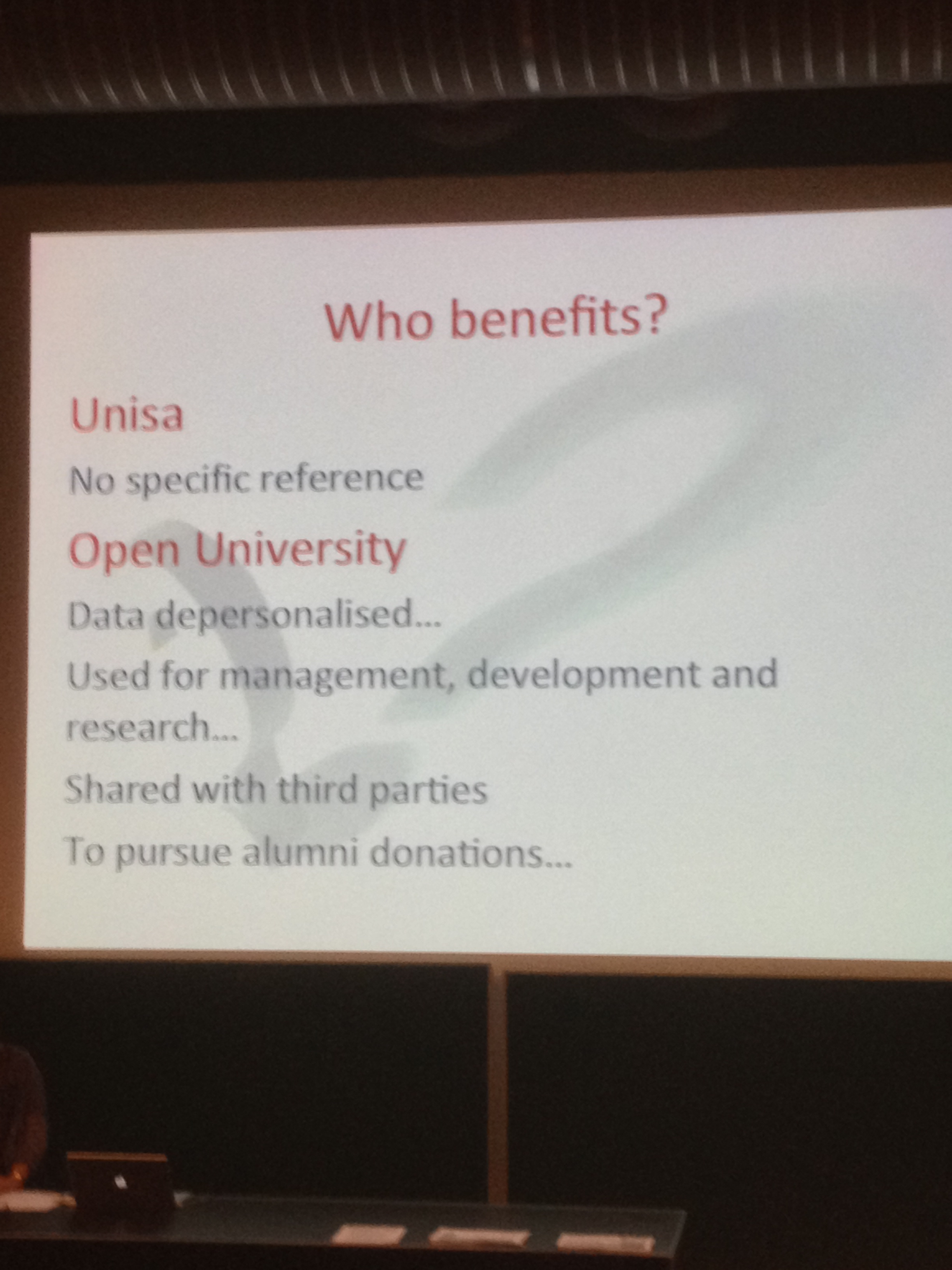

Here’s a slide on Beneficiaries

It’s not intended as a good practice example though!

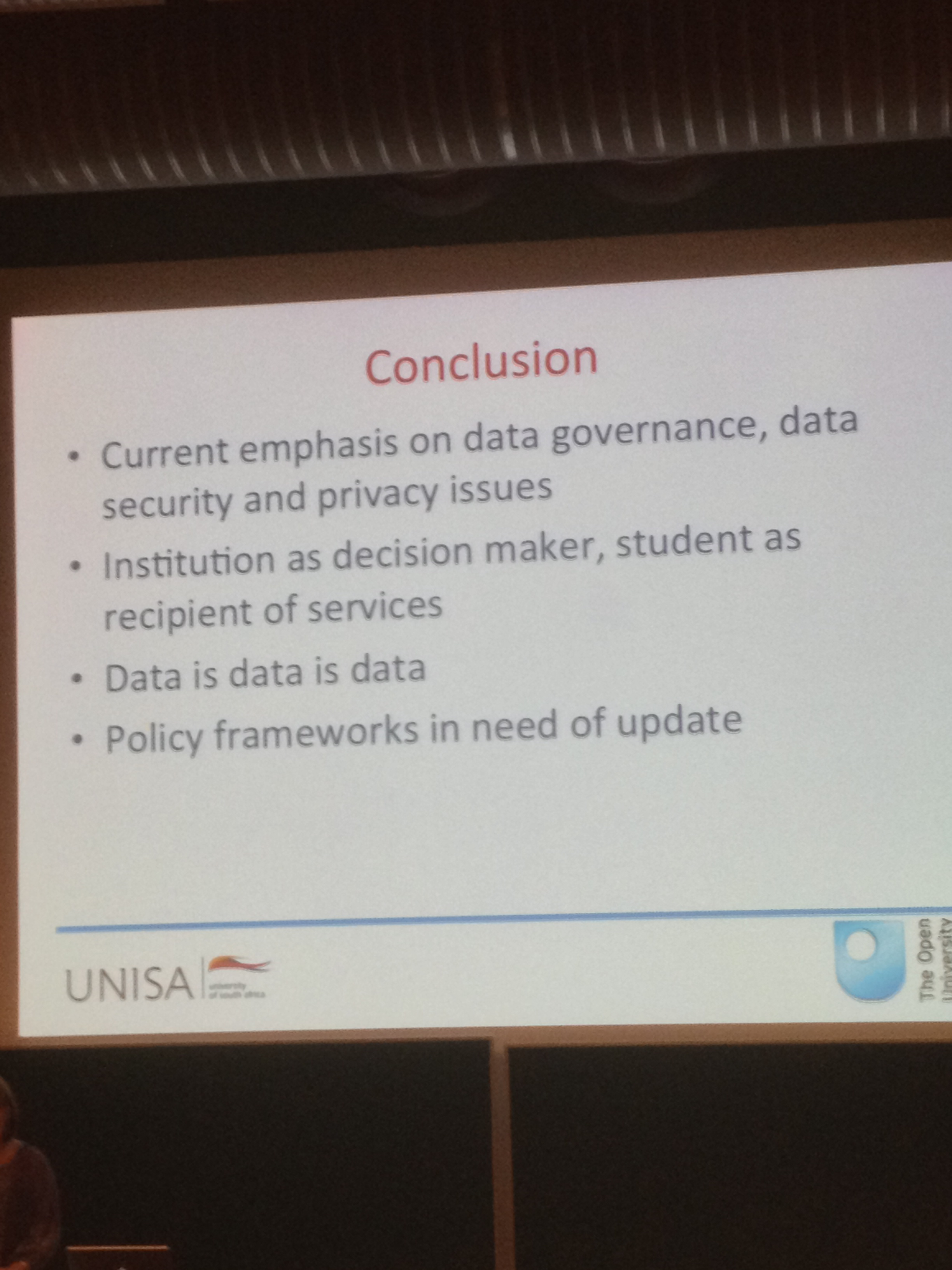

The rest of this talk was largely verbal reporting on an analysis of policy examples at the OU and Unisa, highlighting the issues above, or the gaps. In conclusion:

Here’s a slide from an entirely different presentation that caught my eye

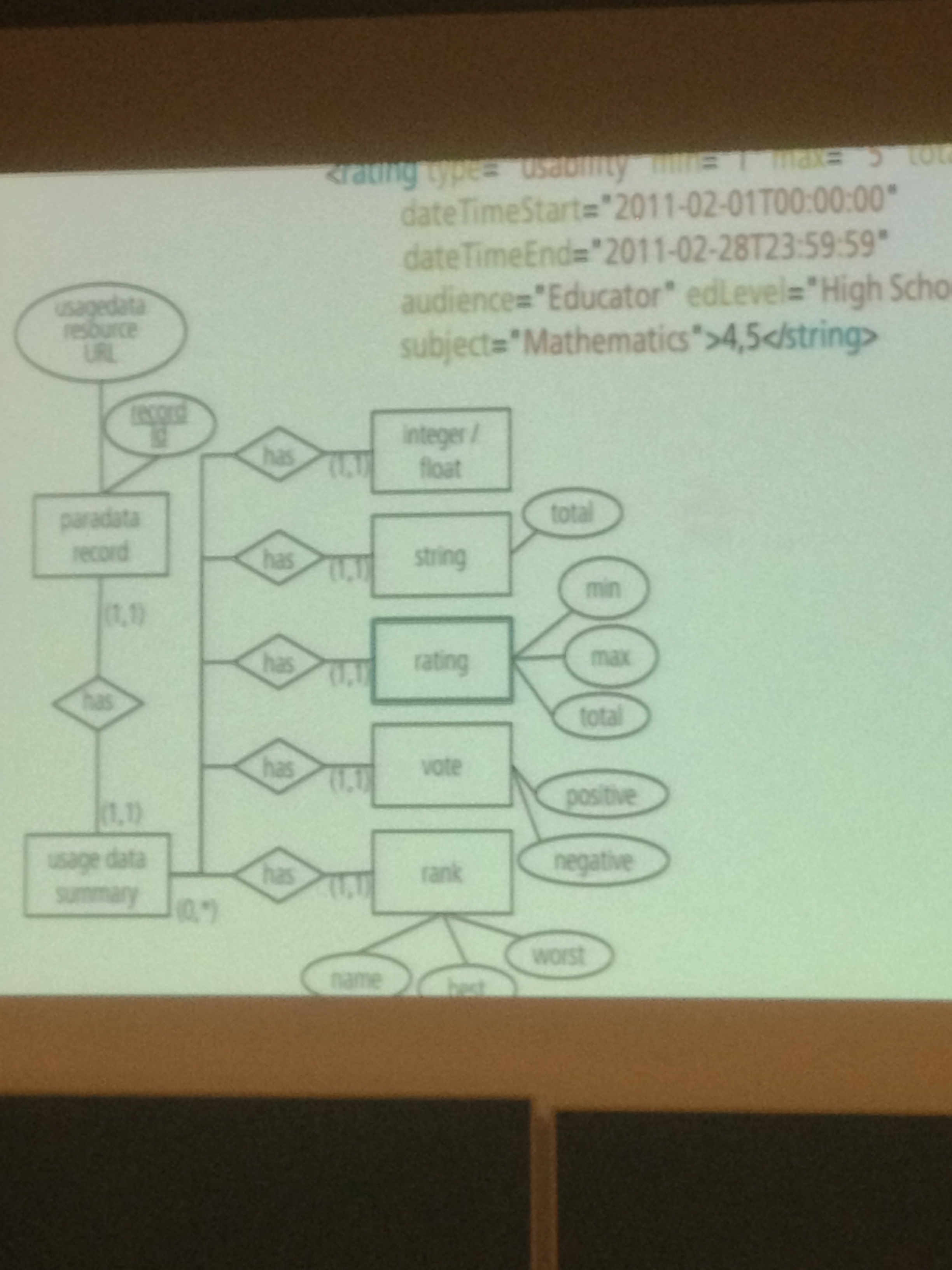

Next up a presentation on Aggregating Social and Usage Datasets for Learning Analytics: Data-oriented Challenges. Katja Niemann, Giannis Stoitsis, Georgis Chinis, Nikos Manouselis, Martin Wolpers

The project joined up three data sets (portals) and analysed them for patterns, which is an unusual approach. I’d have expected the questions to be identified first and the data sources chosen afterwards, but maybe I’m old-fashioned that way. I’m afraid I’m unfamiliar with the CAM Portal, but that’s the first to be analysed. Oh my. It’s all gone a bit information architecture. Not really my bag, I’m afraid.

That’s the end of the session.

Last night I attended a SURF-sponsored dinner where each course was accompanied by an analytics topic for conversation. We were asked to change tables as each topic arrived, causing chaos for the serving staff, but that aside it was a challenging way of squeezing even more work from conference delegates. No rest for the wicked, eh? Speaking of which, here’s a shot of Shane Dawson as the starter topic is introduced.

Next up it’s a stream of Discourse Analytics. I’ve seen a bit of this before and it promised great things but didn’t really deliver at the time, so here’s hoping things have moved on.

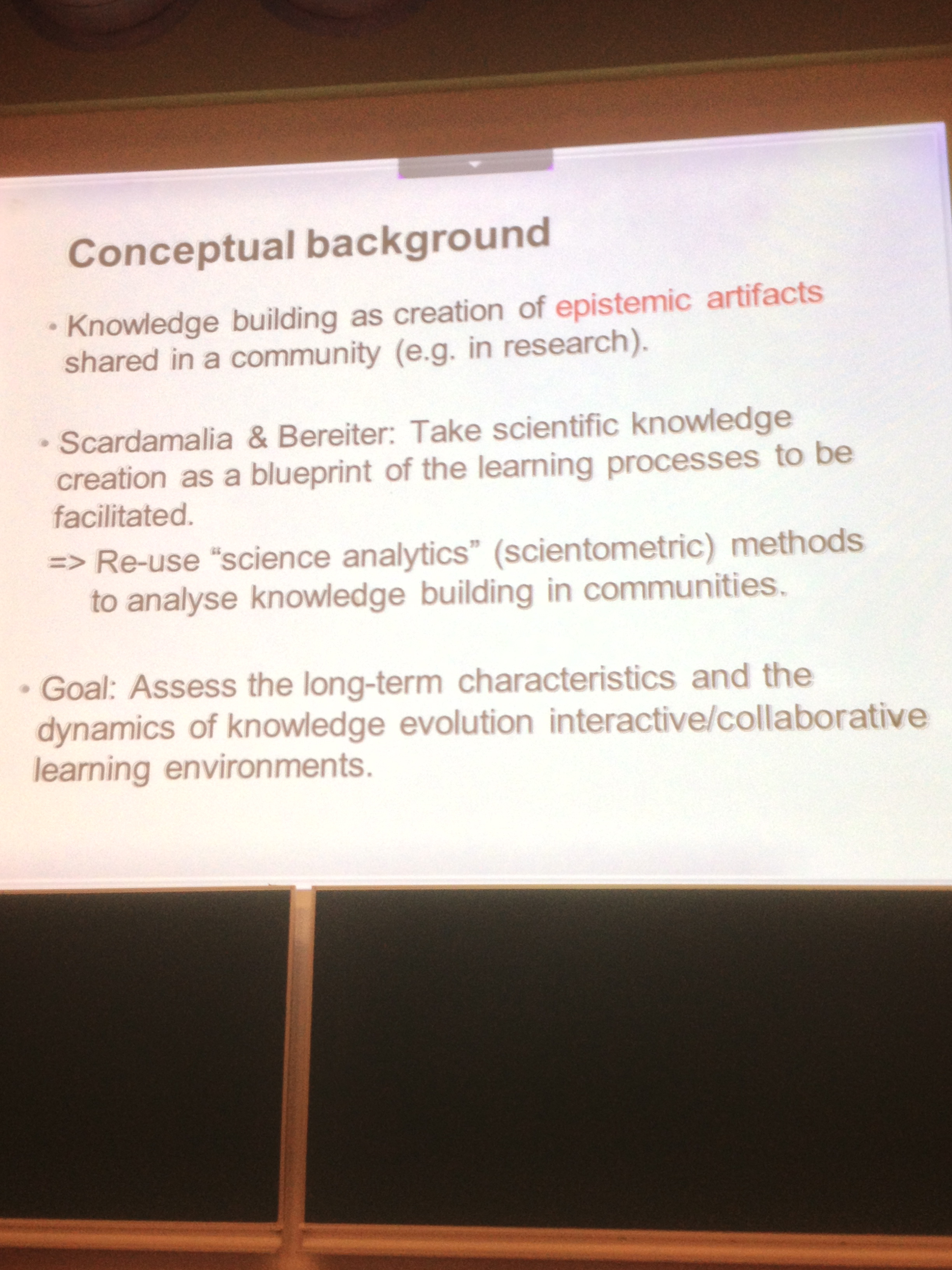

Analyzing the Flow of Ideas and Profiles of Contributors in an Open Learning Community. Iassen Halatchliyski, Tobias Hecking, Tilman Göhnert, H. Ulrich Hoppe (Full, Best Paper Nomination)

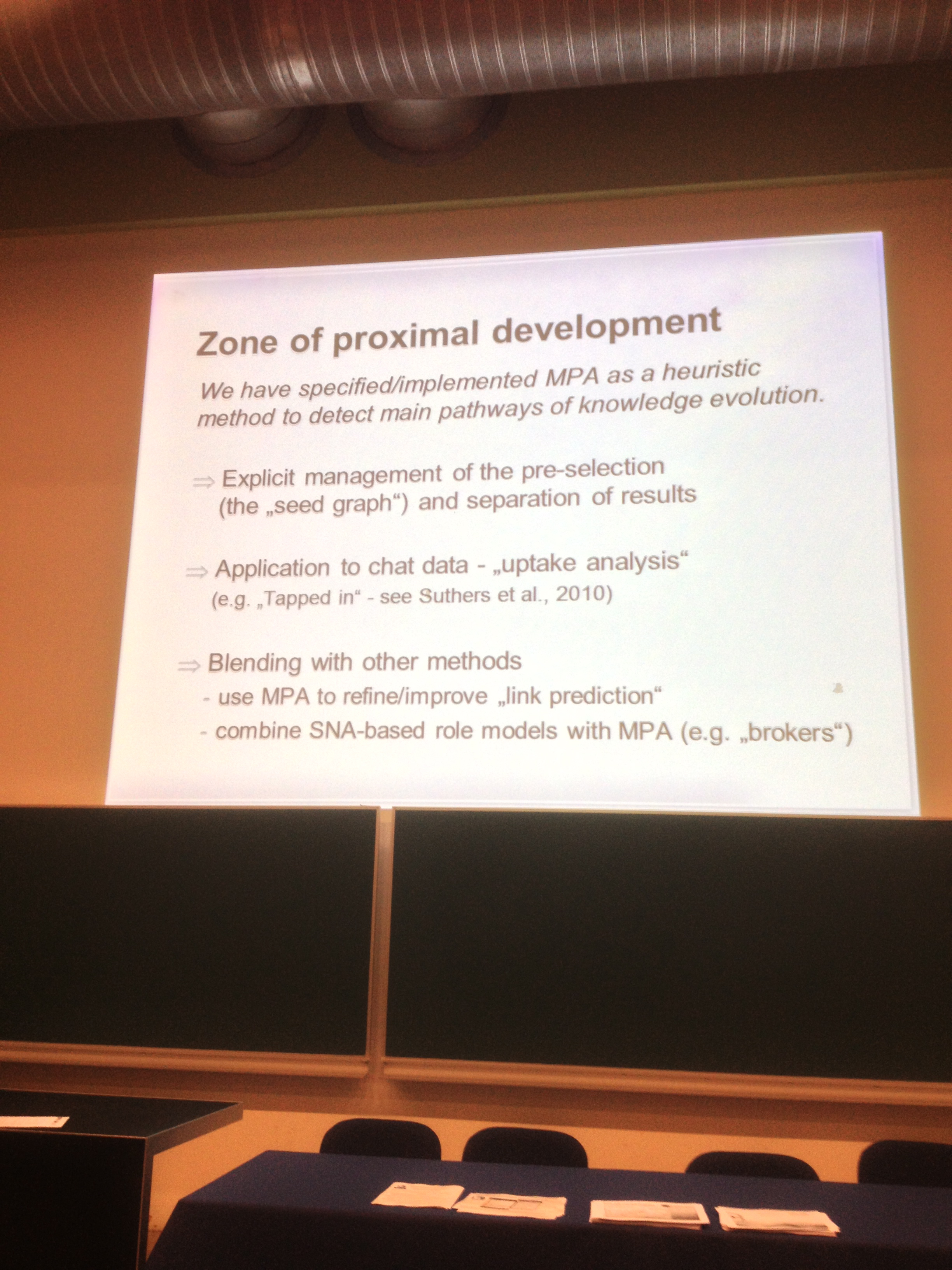

This one aims to characterise the flow of knowledge in knowledge-creating communities using a tweaked version of Main Path Analysis. Full marks for mentioning the zone of proximal development. I’m looking forward to that bit.

An example of collaborative knowledge construction is, of course, Wikipedia.

Here’s the background

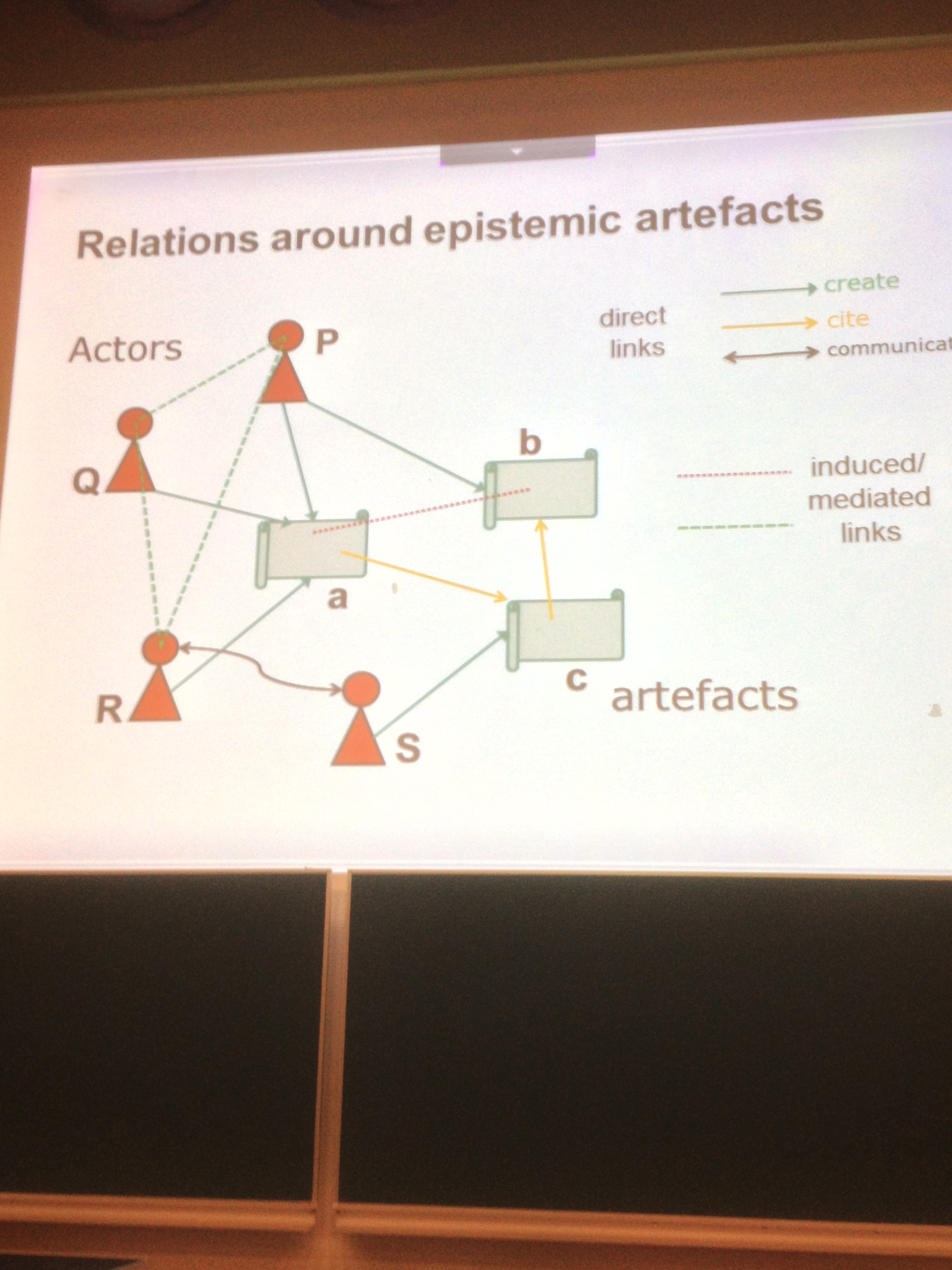

Here we see the relations between artefacts, actors and documents as knowledge

The path analysis is based on citation links. Here’s a slide on the main path analysis. I’m afraid this one is getting rather deep for me. In the wise words of Sheila MacNeill, ‘what does it all mean?’ Let’s hope for enlightenment.

I’m trying to drop into sessions that offer real, concrete systems / services with benefits but, alas, they are proving elusive.

Remember, a DAG is a Directed Acyclic Graph, not a gypsy hound for hare coursing.
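For anyone else finding the main path material deep going, here’s a rough sketch of classic main path analysis as I understand it. It is not the authors’ tweaked version. Assumptions: edges point from an earlier artefact to a later one that builds on it (the reverse of ‘cites’), each edge is weighted by its Search Path Count (SPC, the number of source-to-sink paths running through it), and the main path is traced greedily forward from the heaviest edge leaving a source. The function names and the toy graph are hypothetical; it uses only the Python standard library (`graphlib` needs Python 3.9+).

```python
from collections import defaultdict
from graphlib import TopologicalSorter


def spc_weights(edges):
    """Search Path Count (SPC) weight of every edge in a DAG.

    Edge (u, v) is weighted by how many source-to-sink paths run through it:
    (paths from any source into u) * (paths from v out to any sink).
    Assumes edges point in the direction knowledge flows (earlier -> later).
    """
    succ, pred = defaultdict(list), defaultdict(list)
    nodes = set()
    for u, v in edges:
        succ[u].append(v)
        pred[v].append(u)
        nodes.update((u, v))
    # graphlib expects node -> predecessors; it raises CycleError if this is not a DAG
    order = list(TopologicalSorter({n: pred[n] for n in nodes}).static_order())

    paths_in = {}  # number of paths from any source (no predecessors) ending at n
    for n in order:
        paths_in[n] = sum(paths_in[p] for p in pred[n]) if pred[n] else 1
    paths_out = {}  # number of paths from n ending at any sink (no successors)
    for n in reversed(order):
        paths_out[n] = sum(paths_out[s] for s in succ[n]) if succ[n] else 1

    weights = {(u, v): paths_in[u] * paths_out[v] for u, v in edges}
    return weights, succ, pred


def main_path(edges):
    """Greedy forward main path: heaviest source edge, then heaviest outgoing edge to a sink."""
    weights, succ, pred = spc_weights(edges)
    start = max((e for e in edges if not pred[e[0]]), key=lambda e: weights[e])
    path = list(start)
    while succ[path[-1]]:
        node = path[-1]
        # ties go to the successor listed first (max returns the first maximum)
        path.append(max(succ[node], key=lambda w: weights[(node, w)]))
    return path


# Hypothetical toy graph: A is the only source, E the only sink.
edges = [("A", "B"), ("A", "C"), ("B", "D"), ("C", "D"), ("D", "E"), ("B", "E")]
print(main_path(edges))  # ['A', 'B', 'D', 'E']
```

The appeal of SPC weighting is that an edge scores highly only if lots of routes through the whole network depend on it, so the main path picks out the backbone of the knowledge flow rather than just the most-cited items.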

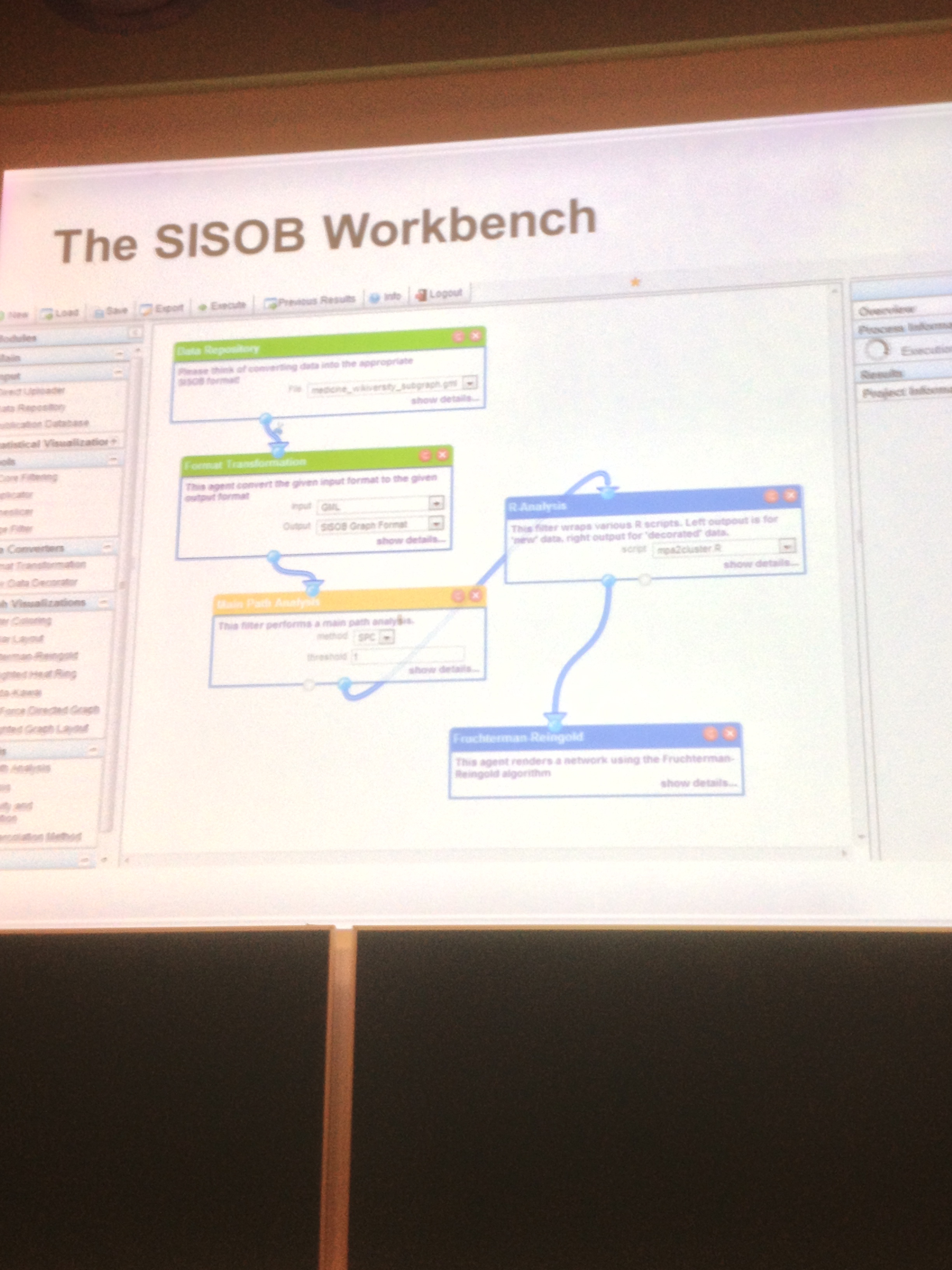

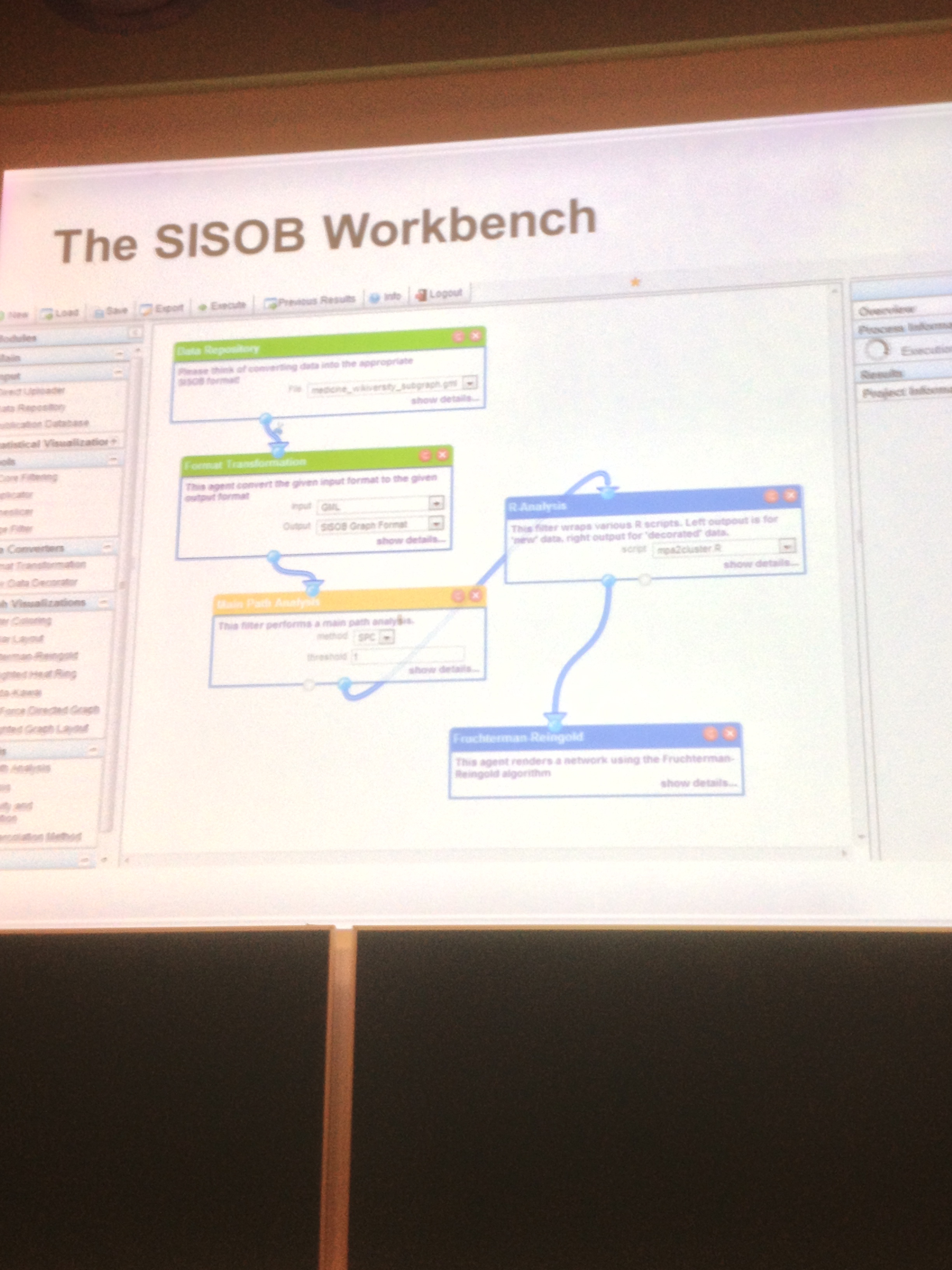

The presenter is giving us technical approaches to creating an analytic model. It’s a look under the bonnet. I think I’m more of a driver than a mechanic 🙂 Clever stuff though, and nice to glimpse the mechanics of things. They’ve produced a ‘workbench’ for this sort of thing.

And here’s that zone of proximal development I was drawn to earlier

It’s rather telling that the three papers in this track have been singled out as candidates for Best Paper. The conference isn’t seeking or rewarding systems in use with large student numbers, or systems ready for wider uptake, or even systems ready for local uptake. It’s focused on theoretical underpinnings. One wonders who is bridging from these academic data scientists out to the vendors, the suppliers of best-of-breed corporate data systems, for wider uptake. My suspicion is that it’s simply not going to happen, though I’d be glad to be proved wrong. There aren’t even commercial sponsors here.

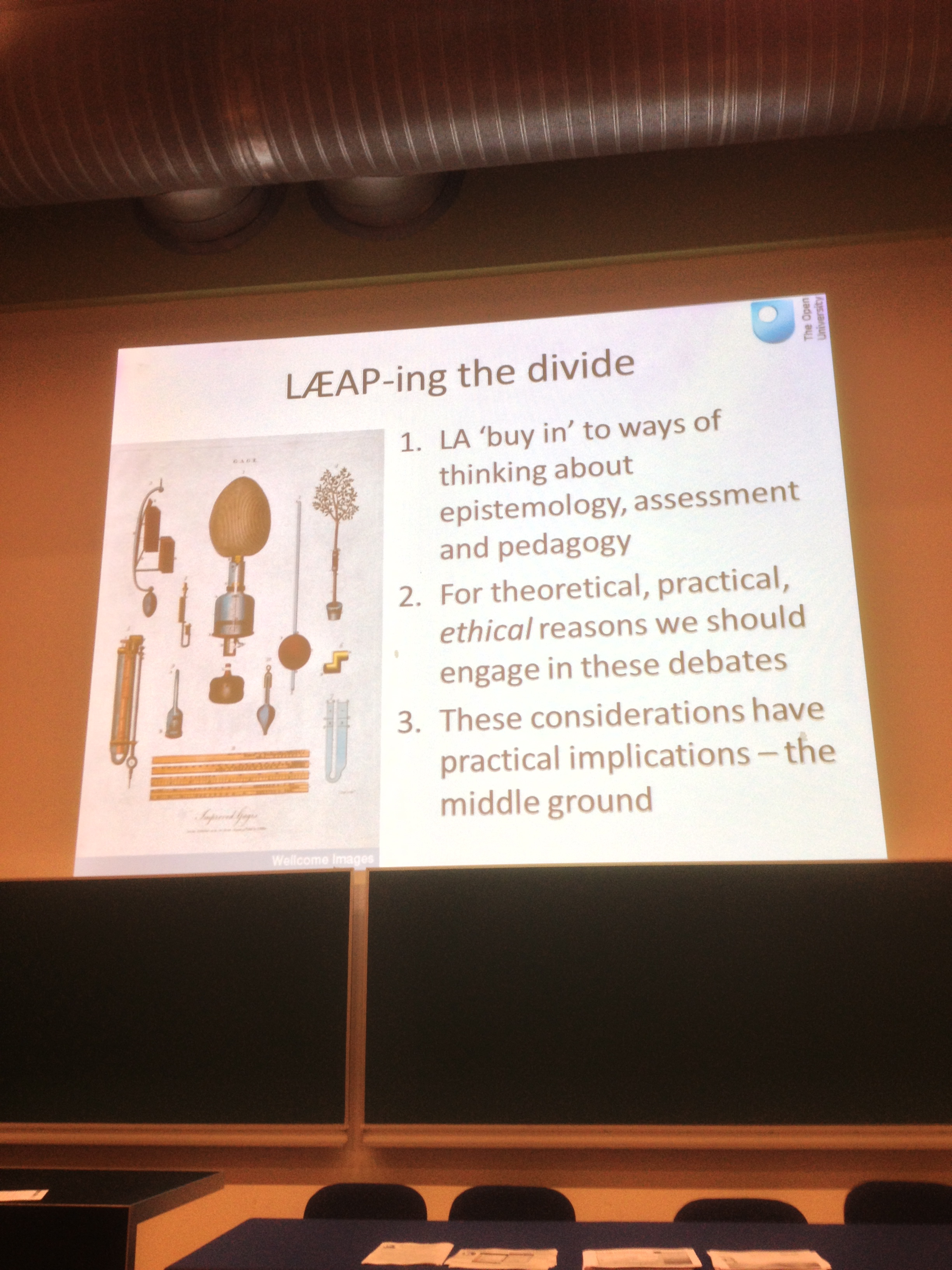

Next up we have Epistemology, Pedagogy, Assessment and Learning Analytics. Simon Knight, Simon Buckingham Shum, Karen Littleton

This one is a PhD paper on epistemology, assessment and pedagogy. Truth, accuracy and fairness are pillars of epistemology, hence the application to assessment.

Denmark have opened up some exams to include internet access. Stephen Heppell thinks the UK exam system is fit for the 19th century, not the 21st, and that Denmark have it right.

Analytics might give us unprecedented access to seeing knowledge in action. Intriguing, and a bold move in terms of assessment. I used to work in assessment and would caution that it’s a very visible, high-risk area. While I can’t imagine the exam boards leaping into this just yet, it’s a concept worth watching.

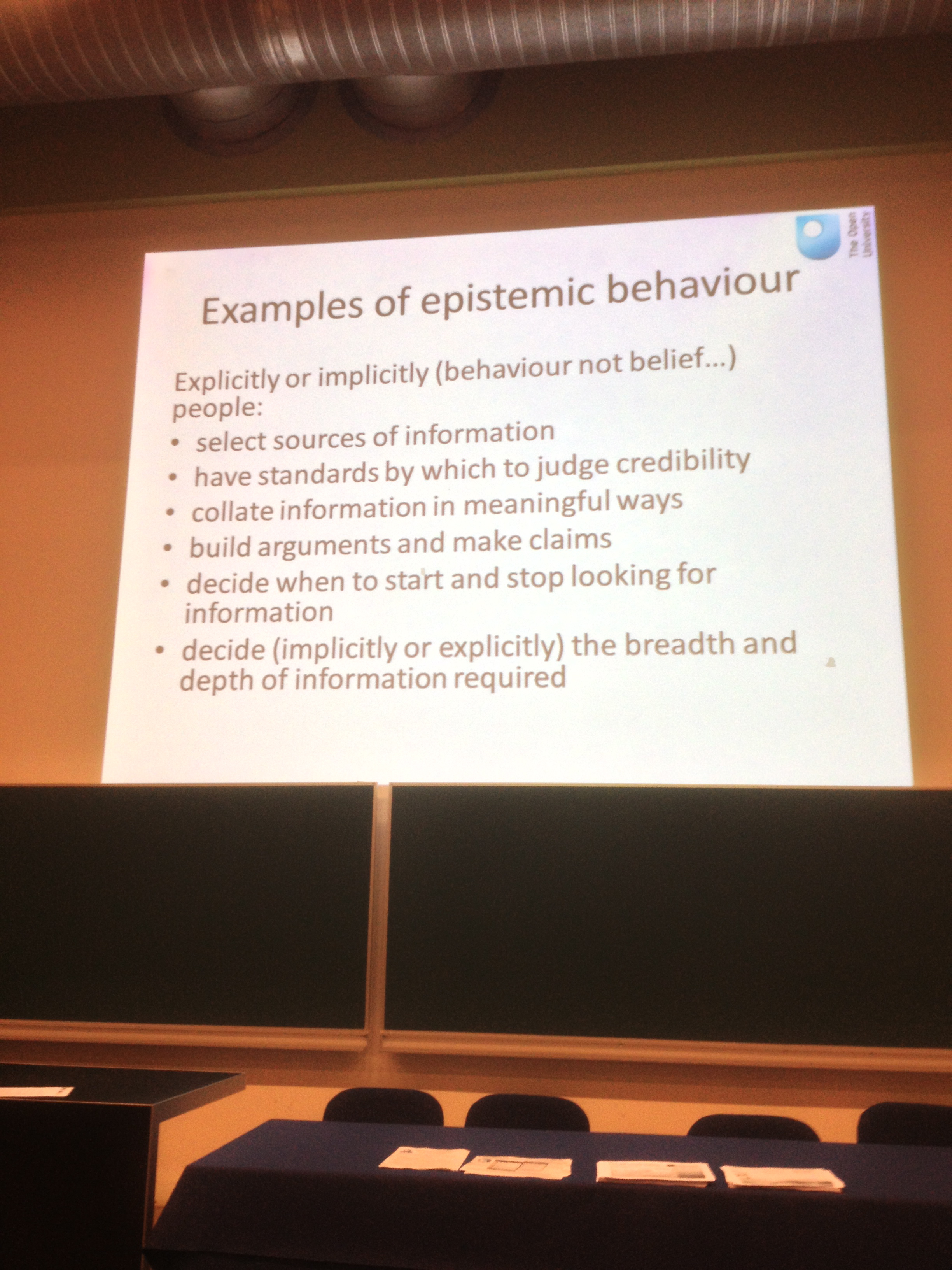

Here are a few examples of epistemic attributes that students might demonstrate as knowledge in action, as the basis for assessment. The team also noted the prospect of automating that assessment.

The team are interested in how people use dialogue as a frame for knowledge building. Natural language processing sits well with this, with discourse as both context and creator of context, reflecting the importance of dialogue in knowledge creation.

I’m rather drawn to this. On the face of it, it has a great deal of potential to change the way we assess knowledge. Nice work.

Last one for me today is An Evaluation of Learning Analytics To Identify Exploratory Dialogue in Online Discussions. Rebecca Ferguson, Zhongyu Wei, Yulan He, Simon Buckingham Shum

The mighty Rebecca Ferguson is back with more analytics in online discussions. I heard a bit about this at the Jisc-supported UK SoLAR Flare, which Sheila blogged about, and I think at LAK12 too. So it’s got provenance.

Rebecca is looking at discourse because language and dialogue are crucial tools in the generation of knowledge. Here are the three ways in which learners engage in dialogue:

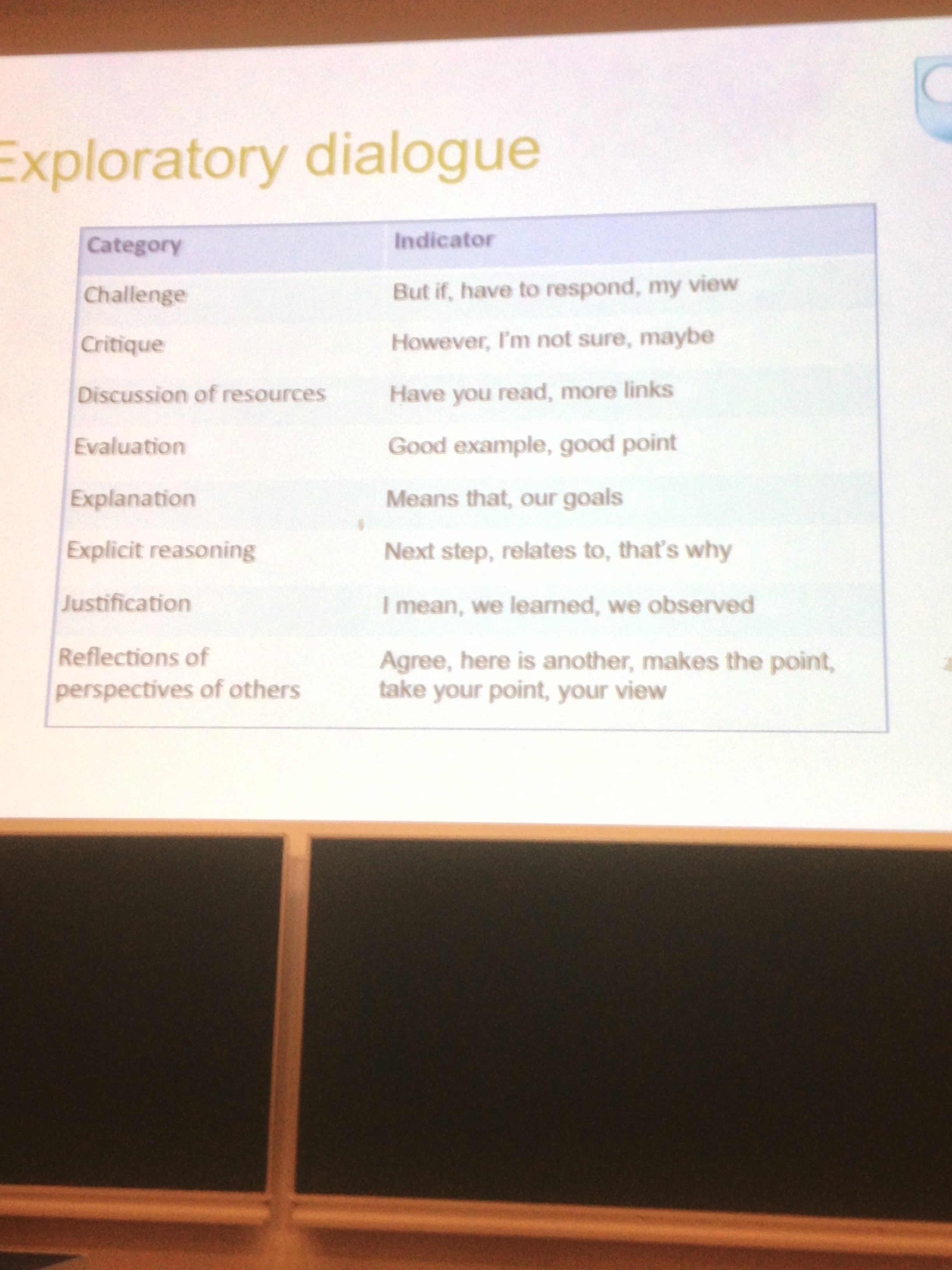

And here are the key characteristics of exploratory dialogue

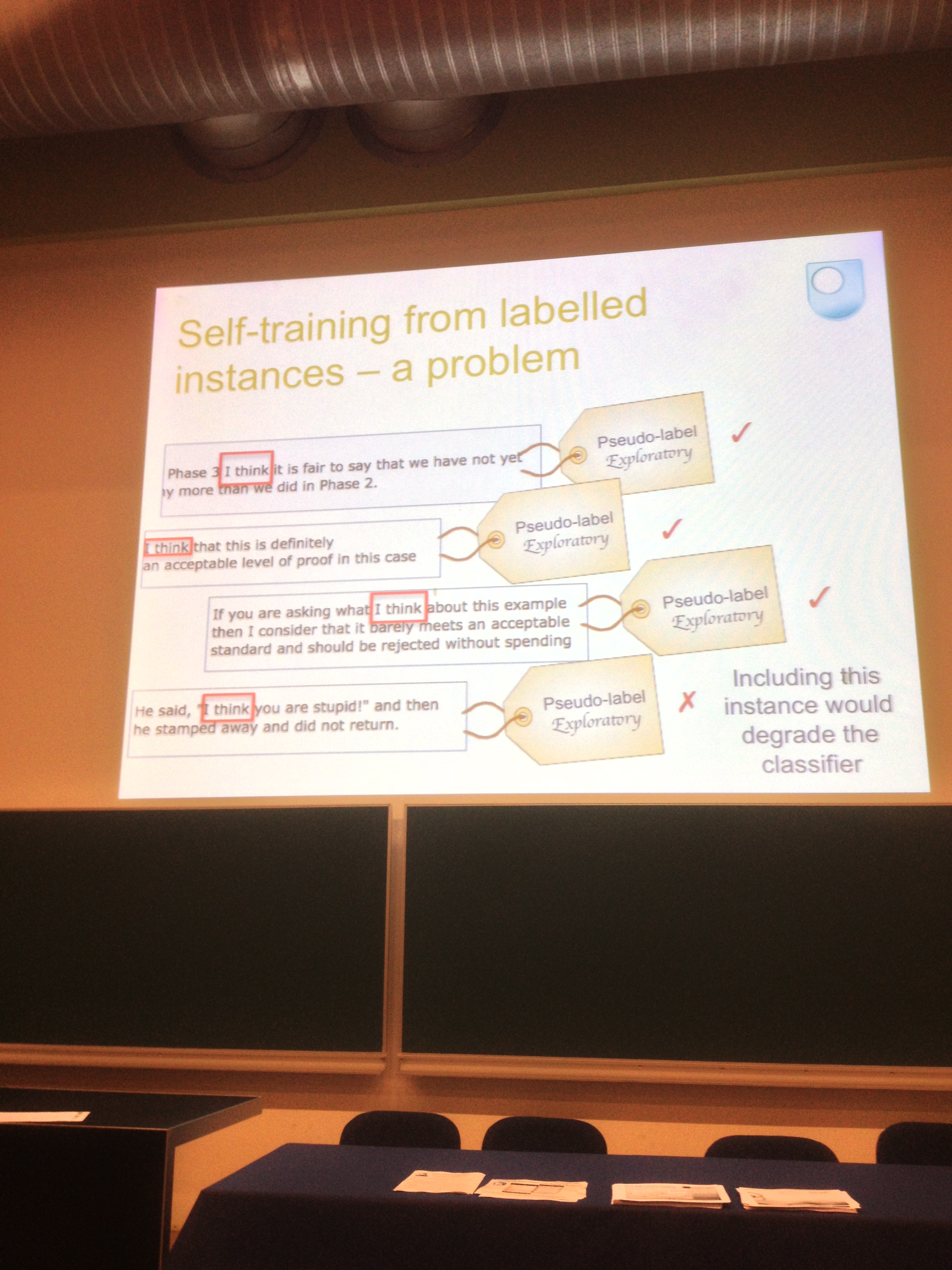

The method is very labour-intensive, so the team branched out and asked computational linguists for input. They suggested a self-training framework:

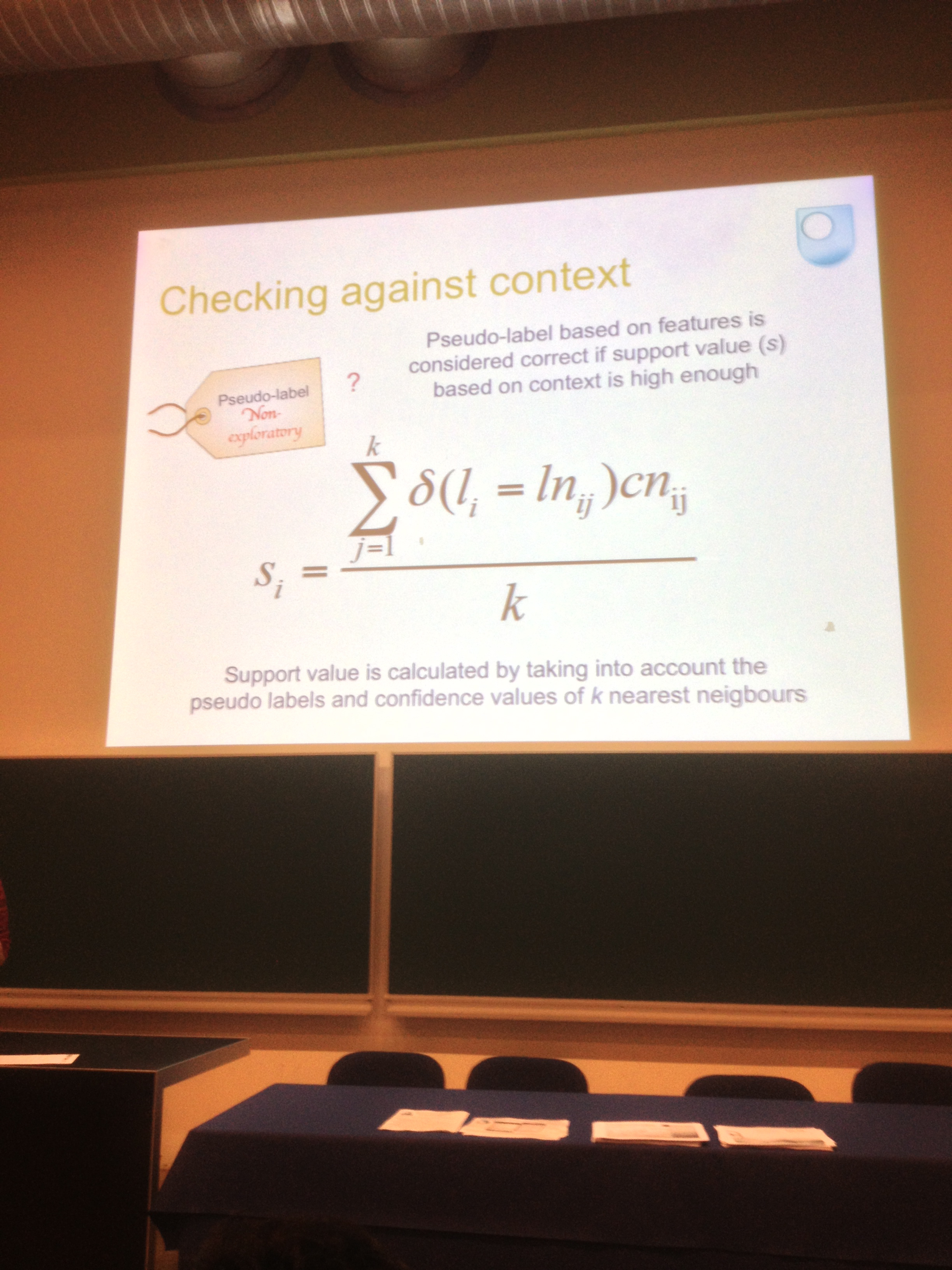
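The slide above has the team’s actual framework. As a rough illustration of the general self-training idea (train on a small hand-coded set, label the unlabelled posts, fold the confident guesses back in as pseudo-labels, retrain, repeat), here’s a minimal Python sketch. The naive Bayes classifier, the 0.9 confidence threshold, the function names and the example posts are all my assumptions, not the authors’ features or method.

```python
import math
from collections import Counter


def train_nb(docs, labels):
    """Multinomial naive Bayes (an assumed stand-in classifier) with add-one smoothing."""
    word_counts = {c: Counter() for c in set(labels)}
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc.lower().split())
    vocab = set().union(*word_counts.values())
    return word_counts, Counter(labels), vocab


def predict_proba(model, doc):
    """Posterior probability of each class for one post."""
    word_counts, class_counts, vocab = model
    total = sum(class_counts.values())
    log_scores = {}
    for c, counts in word_counts.items():
        denom = sum(counts.values()) + len(vocab)
        score = math.log(class_counts[c] / total)
        for w in doc.lower().split():
            if w in vocab:  # words never seen in training are ignored
                score += math.log((counts[w] + 1) / denom)
        log_scores[c] = score
    top = max(log_scores.values())  # subtract the max before exp for numerical stability
    exp = {c: math.exp(s - top) for c, s in log_scores.items()}
    z = sum(exp.values())
    return {c: v / z for c, v in exp.items()}


def self_train(labelled, unlabelled, threshold=0.9, max_rounds=5):
    """Add predictions at or above the (assumed) threshold as pseudo-labels, retrain, repeat."""
    docs = [d for d, _ in labelled]
    labels = [y for _, y in labelled]
    pool = list(unlabelled)
    for _ in range(max_rounds):
        model = train_nb(docs, labels)
        still_unlabelled, added = [], 0
        for doc in pool:
            probs = predict_proba(model, doc)
            best = max(probs, key=probs.get)
            if probs[best] >= threshold:
                docs.append(doc)
                labels.append(best)
                added += 1
            else:
                still_unlabelled.append(doc)
        pool = still_unlabelled
        if not added:  # nothing confident enough this round, so stop
            break
    return train_nb(docs, labels), list(zip(docs, labels)), pool


# Made-up posts for illustration only.
labelled = [
    ("But what if we consider the evidence", "exploratory"),
    ("I agree because the data shows", "exploratory"),
    ("lol nice", "other"),
    ("thanks see you", "other"),
]
unlabelled = ["what if the evidence shows", "thanks lol"]
model, training_set, leftover = self_train(labelled, unlabelled)
```

On these toy posts the first unlabelled message is confident enough to become an ‘exploratory’ pseudo-label, while ‘thanks lol’ never clears the threshold and stays in the pool. The weakness is also visible: a confident but wrong pseudo-label gets baked into every later round, which is presumably why extra checks were layered on.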

All goes well until the final instance! So they suggested a more complex method called labelled features. This was OK but needed to take the topic or context into account. These pseudo-labels can be described by an equation.

They took it further by analysing nearest neighbours, which helped determine whether a label was valid, and then threw in the original 94 phrases. The framework’s output was then checked against manual coding to see whether the two agreed on exploratory and non-exploratory dialogue. Here’s a summary of the methods combined:
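The nearest-neighbour check can be sketched roughly like this: keep a pseudo-label only if most of the post’s k most similar hand-labelled posts carry the same label. Bag-of-words cosine similarity, k = 3, the majority vote and the function names are my assumptions for illustration; the paper’s actual features and rule may well differ.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two posts as bag-of-words count vectors (assumed representation)."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[w] * vb[w] for w in va)
    norm = math.sqrt(sum(x * x for x in va.values())) * math.sqrt(sum(x * x for x in vb.values()))
    return dot / norm if norm else 0.0


def neighbours_agree(post, pseudo_label, labelled, k=3):
    """Keep a pseudo-label only if a strict majority of the k nearest labelled posts share it."""
    nearest = sorted(labelled, key=lambda item: cosine(post, item[0]), reverse=True)[:k]
    votes = Counter(label for _, label in nearest)
    return votes[pseudo_label] > len(nearest) / 2


# Made-up hand-labelled posts for illustration only.
labelled = [
    ("what if we look at the evidence", "exploratory"),
    ("but why does the evidence suggest that", "exploratory"),
    ("lol nice one", "other"),
    ("thanks see you later", "other"),
    ("what if the data is wrong", "exploratory"),
]
print(neighbours_agree("what if the evidence is wrong", "exploratory", labelled))  # True
```

One wrinkle in this simple version: neighbours with zero similarity still get a vote, so a real implementation would probably discard them first.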

And the difference? In terms of reliability of identification, accuracy increased, precision decreased slightly, and recall increased.
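Those three measures can move in different directions, which is how precision can dip while accuracy and recall rise. A minimal sketch with made-up labels for ten posts (these are not the paper’s figures) shows the pattern: a cautious classifier that misses exploratory posts versus an eager one that catches them all at the cost of one false alarm.

```python
def evaluate(gold, predicted, positive="exploratory"):
    """Accuracy, plus precision and recall for the class treated as positive."""
    pairs = list(zip(gold, predicted))
    tp = sum(g == positive and p == positive for g, p in pairs)  # correctly flagged
    fp = sum(g != positive and p == positive for g, p in pairs)  # false alarms
    fn = sum(g == positive and p != positive for g, p in pairs)  # missed
    accuracy = sum(g == p for g, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


# Hypothetical labels (E = exploratory, N = not); not the paper's data.
gold = ["E"] * 4 + ["N"] * 6
cautious = ["E", "E", "N", "N"] + ["N"] * 6  # misses two exploratory posts
eager = ["E"] * 5 + ["N"] * 5  # finds all four, plus one false alarm

print(evaluate(gold, cautious, positive="E"))  # (0.8, 1.0, 0.5)
print(evaluate(gold, eager, positive="E"))  # (0.9, 0.8, 1.0)
```

Going from cautious to eager, accuracy and recall go up while precision comes down, the same shape of result reported in the talk.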

The classifier allows the identification of knowledge generation. Pretty cool!

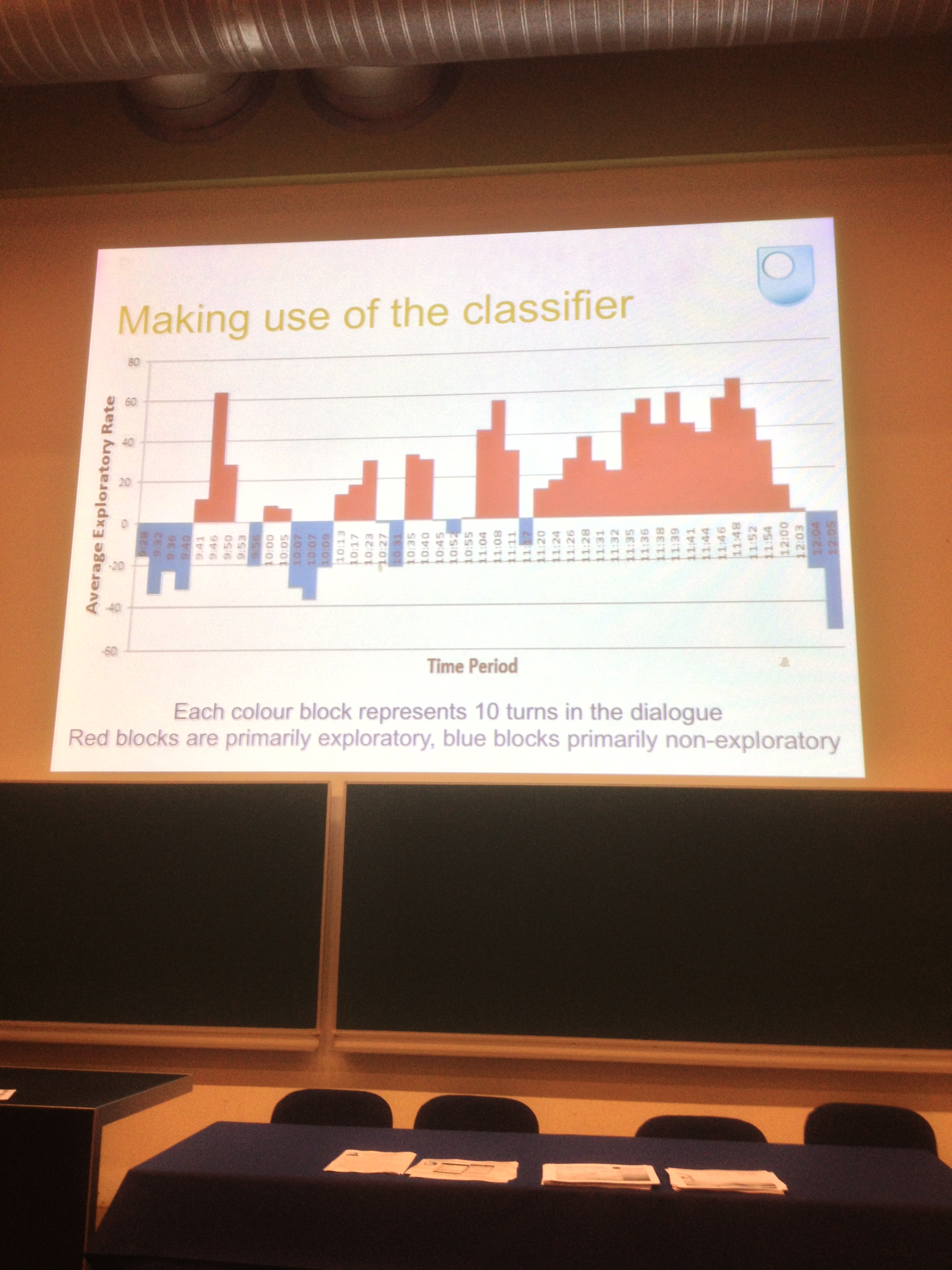

Red is knowledge creation, blue isn’t, the height of each peak is confidence, and it’s plotted against real elapsed time. Also cool, but even better, it can attribute exploratory discourse to individual contributors. It can be used to motivate and guide people. It’s a real example of the ‘middle space’: cross-discipline contributors coming together to do learning analytics. Here’s the conclusion slide.

Here’s a more detailed Discourse Learning Analytics paper presented on Monday at LAK13.

That’s the end of the live blog for today, as I’m on a panel session next. My slides are here.

Panel: Recent and Desired Future Trends in Learning Analytics Research. Liina-Maria Munari (European Commission), Myles Danson & Sheila MacNeill (JISC), Sander & John Doove (SURF).