So far the conference has consisted entirely of plenary lectures, so I think we’re all looking forward to some new formats. First up we have an expert-led discussion session. Our panel comprises Ora Fish, Stefano Rizzi, Bodo Rieger and Elsa Cardoso. Of the four, I’ve managed to make contact with three regarding the work Jisc and HESA have planned on the national business intelligence service for the UK.

The panel are to comment on the following:

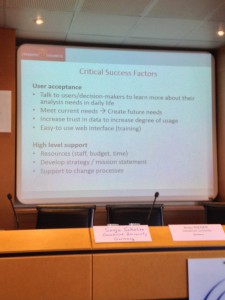

1. Critical Success Factors for BI in HE

2. Vision for BI in the next 5 – 10 years

3. Recommendations and expectations for EUNIS Services

My own comments are marked with >

1. CSFs for BI in HE

Ora Fish – Success comes down to the individuals involved in the initiative: their energy, adaptability, relationships and knowledge.

> Nice one, Ora. The capability of the team, its leadership and their efficacy are clearly key. So what attributes does a successful BI service implementation require? What distinguishes it from other projects? Presumably quite a lot, as it bridges the divide between IT and business leaders, tackles cultural issues around data use, touches on records management and attempts to translate data into knowledge palatable to a wide range of roles.

Hans Pongratz – Agile systems and the business value they bring; how do they meet the needs of consumers (students, lecturers, managers)?

Alberto – The content of the data warehouse matters; look towards the employability of graduates, as this is your final product.

> Absolutely true, but attributing graduate employability to a BI implementation seems a little on the tenuous side. Maybe here we’re touching on which data sources could help predict employability, provide actionable insights and give people the opportunity to act on them. I quite like this as an alternative to analytics that predict success at university.

Elsa – People, strategy, technology and processes are the four areas. Ora covered people; strategy should map to institutional strategy; technologies should map to the architecture; and processes should be enhanced and embraced to smooth the way.

> Can’t fault that as a response! From the floor came a note of a Key Failure Factor: over-reliance on a single person who became so integral that the implementation depended on them entirely.

Stefano Rizzi – The importance of a functioning BICC (Business Intelligence Competency Centre) and its role in data cleanliness and quality.

> Definitely a required capability, but the BICC seems to me an ethereal entity comprising many different capabilities that smooth the way to BI uptake.

Ora Fish – The data warehouse is the key to success. Other system aspects can come and go.

> Isn’t that the case! The most difficult things are often a) getting your data (and processes) in order and b) cultural change and people. Technologies merely enhance.

2. Next 5 – 10 years

A growth in both the demand for and the supply of data scientists capable of providing analytical services.

> But also the incorporation of data literacy into existing roles across the institution, including senior managers.

From the floor –

The UK Moodle community are looking (via the University of London) at analytical insight across the 137 institutional Moodle instances. The panel are concerned about the masking and security of data.

Predictive analytics offering insight into likely employability is a capability we might focus on, as well as academic performance. Simply put: based on student data exhaust across a range of indicators, what is the likelihood of good employability?

Ora Fish – Look to enhance the value we can bring to Universities, take the focus away from the technologies. More analytics, more ‘what if’ scenario solutions, better examples of Big Data value.

Stefano Rizzi – Delivery technologies (mobile); personalisation and recommendation services based on what others who ran the same query did; and collaborative business intelligence, i.e. a network of university consortia sharing data for BI.

Incorporation of cross-sector data to provide insight into future performance. In a nutshell, analyse school, college and pre-university data exhaust to provide insight into changes that could be made to enhance the chance of success.

> Yeah yeah yeah… Minority Report?! In the UK, schools already do a bit of this, but pretty basically/badly. Do we really want to model success based on life experiences? Really?

And so my attendance at the EUNIS BI Taskforce 2014 event must conclude. I’ve a Eurostar to catch. It’s been a long old week: I left home on Monday and will be home tonight at 21.30. It’s Friday. I hope my presence here has helped others via the blogs, and I hope the contacts I’ve forged can be turned into action with me, with Jisc and with the Jisc/HESA BI Project.

It’s been emotional…