26 February 2013 and I’m attending a one-day conference hosted by Simpson Associates on Dashboarding for Higher Ed. The tag for the event is #peakhe.

Feeling really positive about this one. There are over 100 delegates and first impressions from coffee networking are that some really interesting people are here. I already bumped into Gary Tindell of UEL, one of our Jisc Business Intelligence Stars. Gary, Patrick and I co-presented a session focused on the UEL national comparison / benchmarking BI system at the UCISA CISG Conference in November (also blogged here). Gary told me the Jisc investment of just 50K allowed work that has paved the way to a 7 million pound initiative with local government. Not a bad ROI. Being the brave soul I am, I have sat at a round table knowing no one. I’m flanked left and right by University Planners (members of the National Planners group) from St Andrews and Kingston. Vicky Goddard (former chair of National Planners) helped out with the development of our V1 BI InfoKit. I’m informed that the new Chair of the Planners group is Lucy Hodson (Aberystwyth), though Vicky Goddard (Liverpool) and Anita Wright (Liverpool) are still involved. Planners are a key target audience for our new BI InfoKit (due to launch April 2013) and our existing Analytics Series (2013, blog post here).

1. DVC Nottingham

First up we hear from the DVC of Nottingham and I’m pleased to recognise echoes of issues, approaches, experiences and achievements we encountered in the Jisc BI Programme, culminating in the new (launch April 2013) Jisc InfoNet BI InfoKit. There were descriptions of frustrations with access to the right information at the right time, but also of a tendency to focus on the aspects Nottingham are good at (overplay strengths) and underplay weaknesses. The Management Information Hub has demonstrated the value of MI, if collected, analysed and presented appropriately, in tracking progress against the current strategic plan as well as working toward the next one. Access to the system is devolved beyond the executive board and a rosy picture has been painted. Top down commitment cannot be underplayed. Collection is one thing but meaning is imperative – how will the information be used? How do we convert complex business data to formats that are meaningful to varied staff roles? The DVC went on to postulate about the deeper and broader challenges others will face:

Sense of purpose for the whole organisation (data relevance across the entire organisation and related to strategic plan)

Ensuring employee engagement in terms of:

Data literacies

Action planning

Engender trust in the information (data provenance)

Good MI can bridge board members’ perspectives vs what is really going on

Highlights what is going on but also why, allowing informed decisions to be made to address these issues, resources to be better targeted and tracked, and achievements to be identified when they happen.

2. Simpson Associates

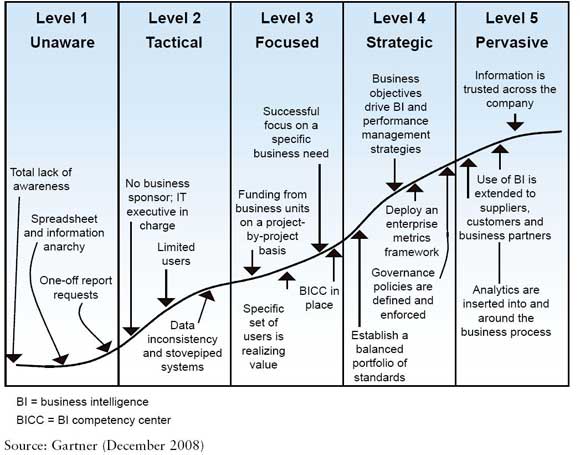

The organisers of this event provided some HE focused case studies identifying the breadth of their consultancy offer around Information Management covering business and technical expertise. They’re citing a BI Maturity Model. Turns out the one they use is the old Gartner model (clearly still valid). At Jisc we developed one of these 3 years ago and have moved our thinking forward so this has evolved into BI Implementation Stages in BI InfoKit 2. I’d like to think we’re a little ahead of the game in Jisc.

Simpson cite the Gartner (May 2012) BI, Analytics and Performance Management CIO Concerns as evidence that BI, Data Warehousing and related issues are current issues.

Simpson methodologies include BI, Scorecards, Data Warehouse, Collaboration, Budgeting and forecasting etc. while they use the Gartner BI Maturity Model which is quite old now (August 2010) and focused on organisational maturity rather than BI characteristics.

Simpson use this as the framework for their BI consultancy.

The Simpson approach is to deliver value to customers quickly and incrementally (across the maturity levels) using sound practice, best of breed systems (Cognos etc) and existing resources such as data warehousing.

A Gartner Level 2 example was a focus on student planning; organisational benefits are quickly demonstrated

A Level 3 example was a Student Progression module

More examples were discussed from outside the HE Sector based on higher maturity levels, which makes me wonder whether Simpson struggled to find Levels 4 and 5 in UK HE. I suspect this is more a reflection of their client list progression being ongoing, rather than the sector itself, and we’ll perhaps hear more of this later in the agenda.

3. IBM Cognos 10 Platform

Slick presentation by IBM showing their existing dashboarding for Student Retention through Cognos 10. Student satisfaction, retention, efficiencies, cost savings and how technology can help. IBM focus is on ‘actionable insights’, a phrase we at Jisc use in our definition of Analytics. So moving from knowledge to action through insights via, presumably, BI and perhaps analytics. We shall see.

A single slide presentation is being revealed showing Finding Relevant Information, IT Bottlenecks vs Siloed Users of Finance, Planning and Faculty. Dashboards were presented on Retention, Retention vs Target, Satisfaction, Segmentation, What if / Scenario Planning and noting that by giving the siloed users access to the dashboards the move to actionable insight is much quicker and more effective. Here’s a snippet from Jisc on Actionable Insight

“Analytics is the process of developing actionable insights through problem definition and the application of statistical models and analysis against existing and/or simulated future data”

A 25% figure of student attrition improvement was cited. I’ll chase up on the source.

Stephen Few has been cited as a primary source (book author) for BI, Visualisation and Data Warehousing.

4. Case Studies: Warwick University on Business Analytics

Warwick seem to be up at level 5 of the Gartner model and at the highest end of our own – that of accurate predictions and forecasting. Cognos 10 and Tableau are the current technologies but they use the Gartner Magic Quadrant and state they are quite mercenary about their choice of technologies and not wed to any single supplier.

‘Experts often possess more data than judgement’ – Colin Powell

‘It is a capital mistake to theorise before one has data’ – Sir Arthur Conan Doyle

Introduces the concept of ‘Signal Advantage’. Using BI / Analytics / MI to aid competitive advantage is critical in the current environment. Smart organisations embrace it. – Warwick.

A number of critical paths to success were revealed, in particular the appointment of an analyst and a superb relationship with the department responsible for corporate information. A recent development is ‘pumping’ data from HESA Heidi (presumably the ‘open’ API) directly into their own data warehouse. Warwick also revealed difficulties in recruitment to support BI and an 11% growth forecast in the industry by Gartner. So a role here for HEIs to provide skilled graduates presumably.

Velocity of data was touched on – some data is real time, some is annual (HESA). I am waiting to hear about Volume and Variety (3Vs of Analytics). Warwick are about to start analysing social media so entering the world of Big Data.

Warwick noted that ‘reports’ might be consumed across a spectrum of operational to strategic. The talk will be more about the strategic end.

Warwick analyse every individual’s research performance. In the Jisc BI Programme University of Bolton undertook a workload model BI tool while Huddersfield and Glasgow examined research performance issues and all three produced case studies. Have a look at the Jisc InfoNet Case Study Wiki.

Nine considerations for Data Visualisations were presented based on work by XXX

Warwick shared their Analytics Journey plans from 2006 – 2016 starting with student number forecasting through research income forecasting, HR Reporting, HESA Benchmarking, space dashboards and more with plans to develop social media and predictive analytics to understand the student journey, student view point, attainment, risk of non completion. The latter I know as Learning Analytics. It has its own conference (LAK 2013 Leuven, April 2013) and research association (SOLAR). Warwick aspire to predict employability of undergraduates and issues across all aspects of the business. Most impressive scale and achievements here.

Analytics has been embedded in process through the challenge of departments via the dashboards.

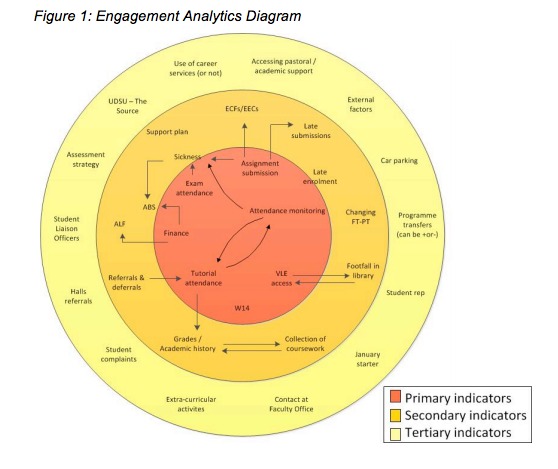

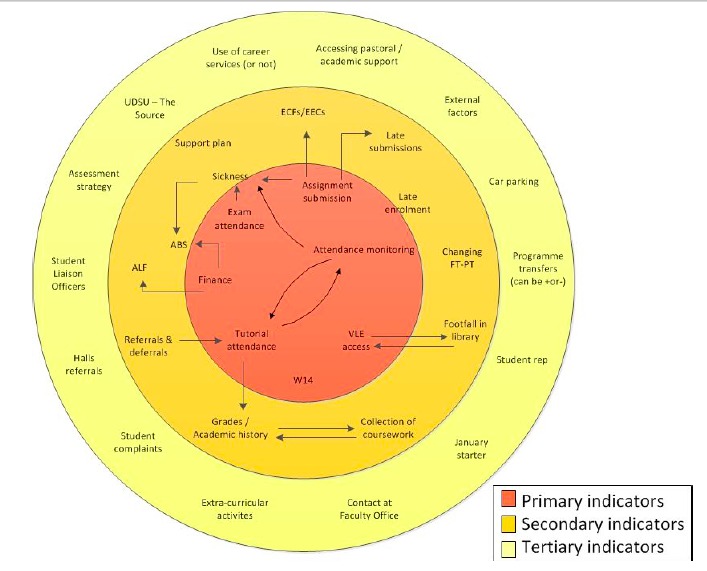

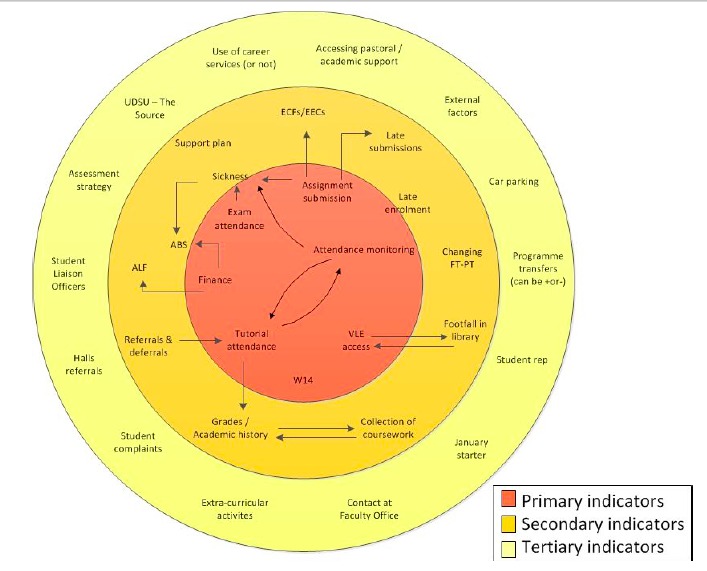

A mention here of student campus services analysis via till data, cashless records and customer demographics. You may not be sure why one would go to this detail on shopping facilities but I know this as ‘Engagement Analytics’, which can help enhance the wider student experience and provide tertiary indicators of attrition, and is the subject of Jisc primed work at Derby University.

Warwick displayed a series of dashboards showing performance trends for research as applications and awards and able to drill down through departments to individual staff. This helps the staff retention strategy. There’s nowhere to hide at Warwick! The Jisc funded BI Project at UEL has similar dashboards including HESA Data so allowing national benchmarking. See the aforementioned case study wiki and presentation (the CISG 2013 conference web site has a video of that presentation).

The space dashboard shows a traffic light of usage. Sometimes these back up the obvious. Later slots of 17.00 – 18.00 are very poorly used. Wednesday afternoons are very poorly used. They probably knew this already – an example of development to give answers you already have I suspect.
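For anyone wondering how a traffic light view like this might be put together, here is a minimal sketch in Python. It is not Warwick's implementation: the thresholds, the slot names and the utilisation figures are all invented for illustration.

```python
def traffic_light(utilisation, amber_at=0.4, green_at=0.7):
    """Map a room utilisation ratio (0.0 to 1.0) to a traffic light colour.

    The amber_at and green_at thresholds are hypothetical defaults,
    not figures quoted at the event.
    """
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0.0 and 1.0")
    if utilisation >= green_at:
        return "green"
    if utilisation >= amber_at:
        return "amber"
    return "red"


# Invented sample data: (day, start time) -> share of bookable seats in use.
sample_slots = {
    ("Mon", "10.00"): 0.85,
    ("Tue", "11.00"): 0.55,
    ("Wed", "14.00"): 0.15,
    ("Thu", "17.00"): 0.25,
}

for (day, start), utilisation in sample_slots.items():
    print(day, start, traffic_light(utilisation))
```

The only real design decision is where the thresholds sit, which is presumably something an institution would agree with its estates team rather than hard code.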

NSS (National Student Survey) is as one would expect but allows the ‘challenge’ of departments (and presumably targets for improvement).

Success factors include (again)

Leadership buy in, develop and retain skills, stakeholder involvement, links to strategy, embed in practice, data security.

5. Case Studies: Nottingham MI Hub

Well, it’s Nottingham, but not as we know it. Second presentation from Notts, this time about the previously mentioned MI Hub at the centre of the success we heard about earlier. Liverpool developed a BI Competency Centre as part of their Jisc funded BI Project and I am guessing this will be a similar concept. See the case study wiki for more on that.

First note of action is that reporting against flawed strategies is itself flawed. So undertaking an ‘As Is’ analysis is important. We found that reflecting that against the maturity model characteristics, identifying a desirable ‘to be’ state (matched against strategy) helped gain leadership buy in and a better chance of success.

Can you sail under a Pirate? The only rules that matter are what a man can do and what a man can’t do (Captain Jack Sparrow)

Project principles included doing the work right and once, user centred design, latest thinking in data visualisation, minimal training and support for users of the MI. Potentially useful throwaway comment; ‘There’s no point in working with the lukewarm, they’ll come on board eventually’.

As one might imagine this presentation reflects heavily on the previous Nottingham one.

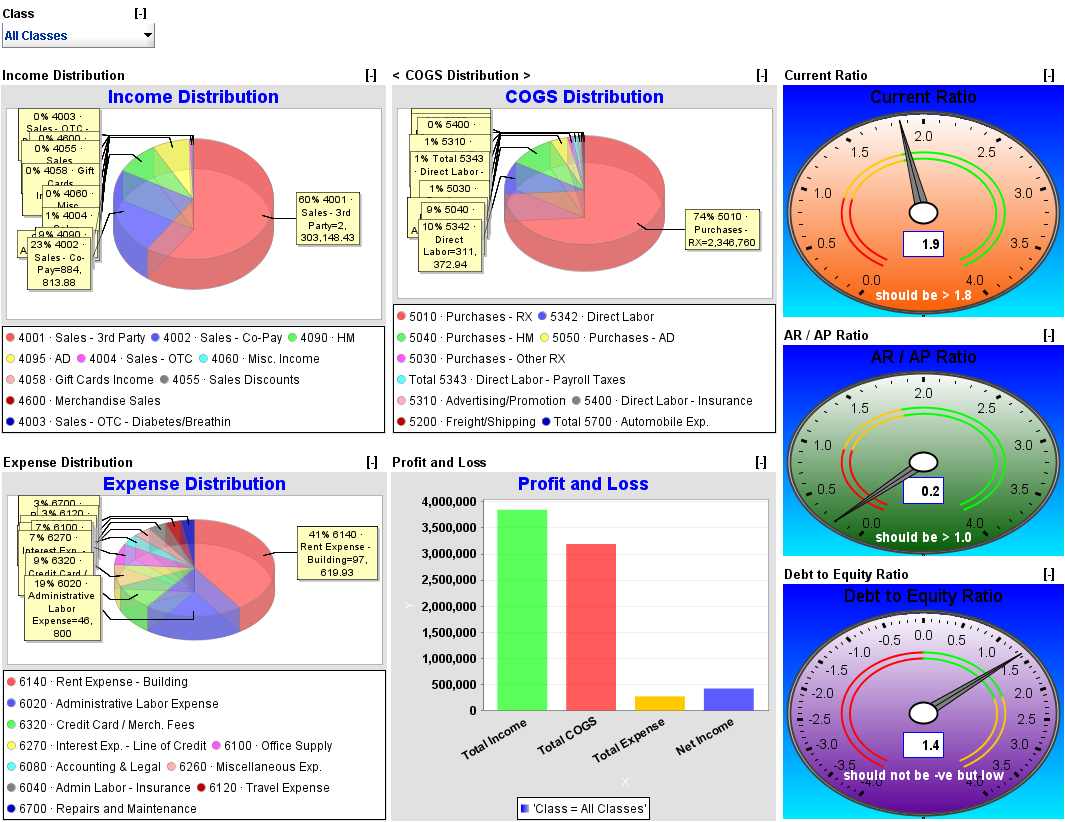

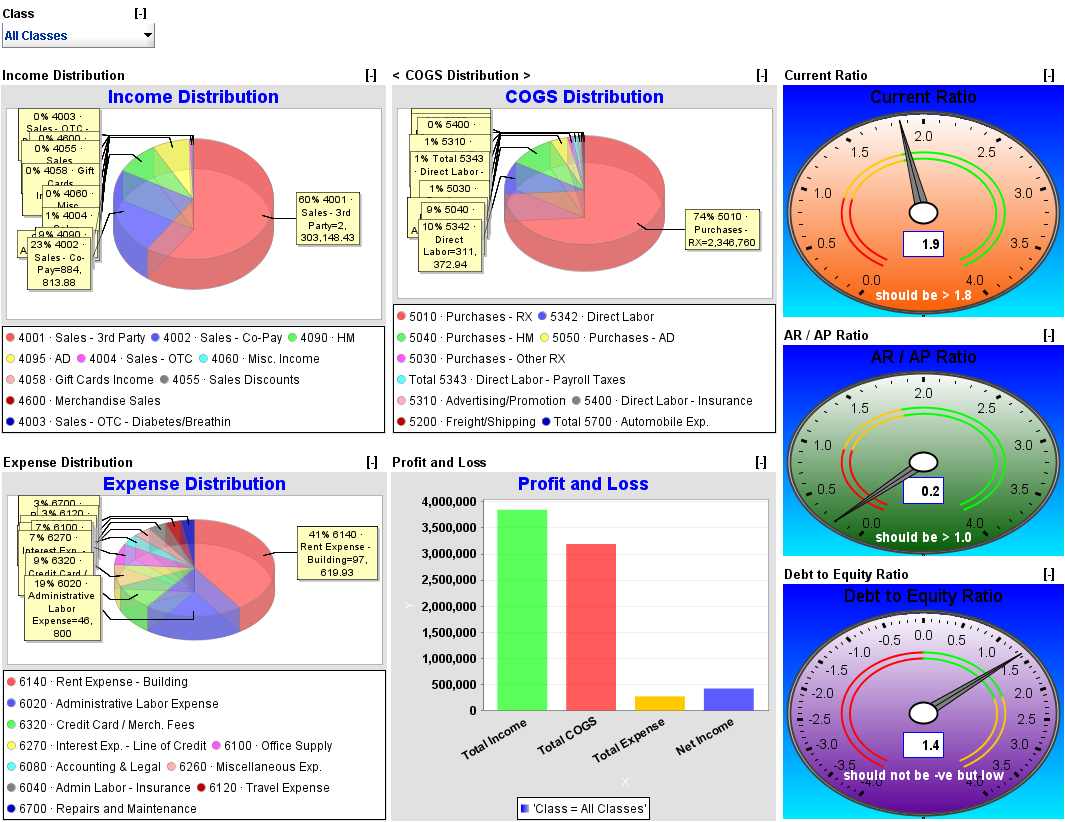

Much advice here about warehousing and visualisations (avoid pie charts, gauges and superfluous colour, draw attention to key information, apply ruthless consistency). Not sure I agree with all of this to be frank – better perhaps to work with end users as co-producers. Beware – MI Users have little experience of BI Systems. Next steps are predictive modelling (that’s long hand for Analytics)

We were next presented with some detailed examples of Nottingham Dashboards. Nice use of presentation software to highlight detail of these complex dashboards – have seen Prezi do this effectively. The importance of a greyscale majority, using colour only to draw attention to key summaries, was stressed.

Applications data is pulled into the warehouse fortnightly (nice issue of velocity of data).

Domicile of applicants shows that chemistry is the majority, the dashboards can show whether this is a wider trend and actionable insight presumably becomes evident – is there a wider untapped market here and why? Student type is updated weekly, the aspiration is to do this daily (but no justification of why one would need this, I’d advise caution that one doesn’t get over enthused by the possibilities here)

Benchmarks, benchmarks, benchmarks. Everyone loves a good benchmark. Lots here, lots at Warwick and lots in the Jisc work on BI to date.

Next up we have a series of breakout sessions intended to be interactive so I propose to share the titles here and perhaps some findings if not already covered previously.

Breakout 1. Developing Dashboards: Sharing notes from your experiences

A panel discussion about moving your dashboard projects forward whatever your maturity level.

Based on the aforementioned Gartner Maturity model a show of hands revealed that among participants levels 2 and 3 prevail, no one was at level 1, 2 or 5.

Next we were asked a series of questions

Q1. What advice would you offer to those at earlier stages? This seemed a shame given these folks have already achieved the transitions required. Responses included:

Senior Manager buy in

Deliver results quickly in the project

Q2. Technology issues for success

Clarity vs simplicity, relentless consistency (of screen furniture), demonstration of benefits and relevance to roles

Give confidence in the data by providing reports that end users are used to. Give them what they think they want as well as what the dashboard can achieve

Put BI on top of BI – how is the dashboard being used, how are they using it, where to focus developer energy

Don’t underestimate the degree to which people feel threatened

Q3. To what extent are dashboards made available to externals

Little evidence of this but more expected this afternoon

Q4. How to control information architecture eg renegade staff, systems and data collections

Much discussion here, governance, provide better systems, educate staff, centralise, take small steps toward an enterprise data warehouse etc

Q5. How to win users over from their demand for whizzy widgets to simple visualisations

Have courage in your convictions

Prescribe their experience, provide no option

Q6. How do you prioritise demand for features?

Strategic priorities take precedence in terms of broader themes eg research, student experience, admissions based on evidence of challenges, capabilities, pay back etc

Make your financial people happy – they hold the purse strings to the University

Get senior manager involvement in setting priorities. It is highly important to show them what is possible: don’t just ask them what they think they want, help work out what they actually need, do mock ups, home in on a priority and don’t start too big

Don’t get bogged down in data quality issues, you may spend an age solving them and achieve no BI at all

Know the enemy

Q7. Is there potential for a shared service for BI dashboards

Incredibly difficult. Every institution has a different set up so there’s no one size fits all. There is opportunity in knowledge sharing in terms of what is possible in analysis. The assets to commercialise are knowledge ones so difficult to achieve

What does competition mean in HE – would we want to agree a standard approach to MI in HE? It would be hard to find common ground due to differing capabilities, resources, aspirations. Why would levelling out BI capability make economic sense?

Breakout 2. Student Populations in Income Forecasting: Different approaches to common challenges

Well attended session perhaps indicating a widely felt issue. Demonstrations of different dashboards. One you had to be there for really! Nice idea of personal sandbox to allow users to experiment with their own reports.

6. Arizona State Case Study, John Rome

73K students, largest in the US, big issues around student experience.

Focus here is on enabling student success. Reliance on a student portal which includes performance dashboards and an analytics driven ‘eAdvisor’ – a course recommendation service based on competency requirements. Claims 84% retention rate.

Data warehouse is mature and ‘vast’ including activity data (to granularity of clicks recorded on web systems), residential / life data (how is their room mate doing) and much more.

Claims a data warehouse starter kit (vendor supplied solution) can only achieve 35% of capability.

Various dashboards shown here, impressive scale of implementation, many seen already today.

Several triggers for an ‘outreach event’ described – if a student requests a transcript they’re probably thinking of leaving. Students who don’t post a personal photo are some of the most at risk of withdrawing etc
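To make the trigger idea concrete, here is a rough Python sketch of a rule-based check using only the two triggers mentioned above. It is purely illustrative and not Arizona State's system: the `Student` record, its field names and the `outreach_reasons` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Student:
    # Hypothetical record; field names are invented for this sketch.
    student_id: str
    requested_transcript: bool
    has_profile_photo: bool


def outreach_reasons(student):
    """Return the reasons, if any, why this student might warrant outreach."""
    reasons = []
    if student.requested_transcript:
        reasons.append("requested a transcript")
    if not student.has_profile_photo:
        reasons.append("no personal photo posted")
    return reasons


# Invented sample records.
students = [
    Student("s001", requested_transcript=True, has_profile_photo=True),
    Student("s002", requested_transcript=False, has_profile_photo=False),
    Student("s003", requested_transcript=False, has_profile_photo=True),
]

for student in students:
    reasons = outreach_reasons(student)
    if reasons:
        print(student.student_id, "->", ", ".join(reasons))
```

Returning the reasons rather than a simple yes or no means whoever makes the outreach call knows why the student was flagged.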

Financial records are included so students can see any outstanding issues, presumably this is also an outreach trigger event

A data driven culture has been created; policies, security and data governance are included

4% of IT staff are BI and data warehouse people and this includes students as employees

7. The art of data visualisation, Andy Kirk

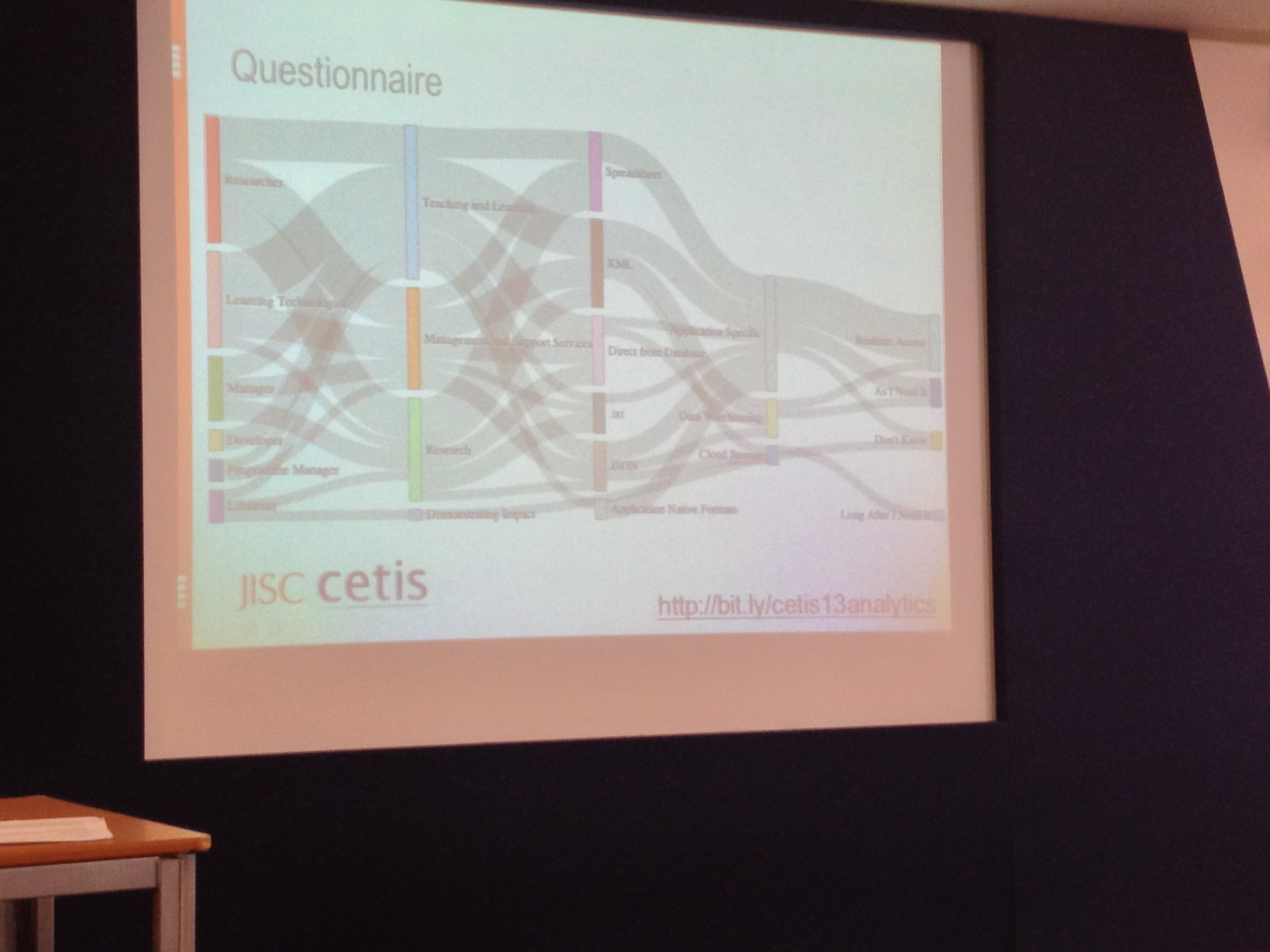

Andy has a book out. He’s from an analytics background and introduces visualisations beyond the bar graph. As one might expect – this was extremely visual! Nice work Andy but not something I can record here. Reminds me of some of the work I’ve seen @psychemedia undertake. You’d probably get on well.